ざっくり何について書かれているのかを把握しやすいようなマインドマップにまとめてみました。情報の抽出にはCopilotを使用しました。ところどころおかしなところがあるのは把握しています。気が向いたら修正したいとは思っていますが、とりあえず、そのまま掲載しています。どこの項に知りたいことが書かれているのかを探す向きにはこういうまとめも有用だろうと思います。実務に重要なポイントは元の文章を確認する必要があります。

もとになったのは平成29年版

Q&A 長いので2つのパートに分割しました

ざっくり何について書かれているのかを把握しやすいようなマインドマップにまとめてみました。情報の抽出にはCopilotを使用しました。ところどころおかしなところがあるのは把握しています。気が向いたら修正したいとは思っていますが、とりあえず、そのまま掲載しています。どこの項に知りたいことが書かれているのかを探す向きにはこういうまとめも有用だろうと思います。実務に重要なポイントは元の文章を確認する必要があります。

もとになったのは令和6年3月27日一部改訂版

What are the molecular targeted drugs for cancers with BRCA1 and BRCA2 gene mutations?

BRCA1およびBRCA2遺伝子変異を持つがんに対する分子標的薬で注目されているのはPARP阻害剤(PARPi)です。現在、FDAに承認されているPARPiは4種類あり、これらはBRCA1/2欠損がんの治療に利用されています(Ragupathi et al., 2023)。

最初に承認されたPARPiであるオラパリブは、BRCA変異を持つ卵巣がん患者の治療において非常に有望な結果を示しています(Venkitaraman, 2009; Tangutoori et al., 2015)。PARPiは、がん細胞のゲノム不安定性を利用してDNA損傷応答を標的とし、従来の化学療法と比較してより腫瘍細胞選択的なアプローチを提供します(O’Connor, 2015)。

PARPiの細胞毒性は、BRCA1/2変異腫瘍の複製が困難なゲノム領域に過剰な複製ストレスを誘導することによると考えられています(Ragupathi et al., 2023)。現在進行中の研究では、PARPiと免疫チェックポイント阻害薬を組み合わせて臨床結果を向上させる方法が探求されています(Ragupathi et al., 2023)。さらに、PARPiは他のさまざまながんタイプに対しても、単独療法および他の治療法との併用療法としての利用が検討されています(Tangutoori et al., 2015)。

このように、PARP阻害剤はBRCA1/2遺伝子変異を持つがん患者にとって新たな希望となる治療法であり、今後の研究と臨床応用が期待されています。

Molecular targeted drugs for cancers with BRCA1 and BRCA2 gene mutations primarily focus on PARP inhibitors (PARPi). Four FDA-approved PARPi are currently available for treating BRCA1/2-deficient cancers (Ragupathi et al., 2023). Olaparib, the first approved PARPi, has shown promise in treating ovarian cancer patients with BRCA mutations (Venkitaraman, 2009; Tangutoori et al., 2015). PARPi exploit the genomic instability of cancer cells by targeting the DNA damage response, offering a more selective approach compared to traditional chemotherapy (O’Connor, 2015). The cytotoxic effect of PARPi is believed to result from inducing excessive replication stress in difficult-to-replicate genomic regions of BRCA1/2 mutated tumors (Ragupathi et al., 2023). Ongoing research explores combining PARPi with immuno-oncology drugs to enhance clinical outcomes (Ragupathi et al., 2023). Additionally, PARPi are being investigated for use in various other cancer types, both as monotherapies and in combination with other treatments (Tangutoori et al., 2015).

References

O’Connor, M. J. (2015). Targeting the DNA Damage Response in Cancer. 60(4), 547–560. https://doi.org/10.1016/j.molcel.2015.10.040

Ragupathi, A., Singh, M., Perez, A. M., & Zhang, D. (2023). Targeting the BRCA1/2 deficient cancer with PARP inhibitors: Clinical outcomes and mechanistic insights. 11, 1133472. https://doi.org/10.3389/fcell.2023.1133472

Tangutoori, S., Baldwin, P., & Sridhar, S. (2015). PARP inhibitors: A new era of targeted therapy. 81(1), 5–9. https://doi.org/10.1016/j.maturitas.2015.01.015

Venkitaraman, A. R. (2009). Targeting the molecular defect in BRCA-deficient tumors for cancer therapy. 16(2), 89–90. https://doi.org/10.1016/j.ccr.2009.07.011

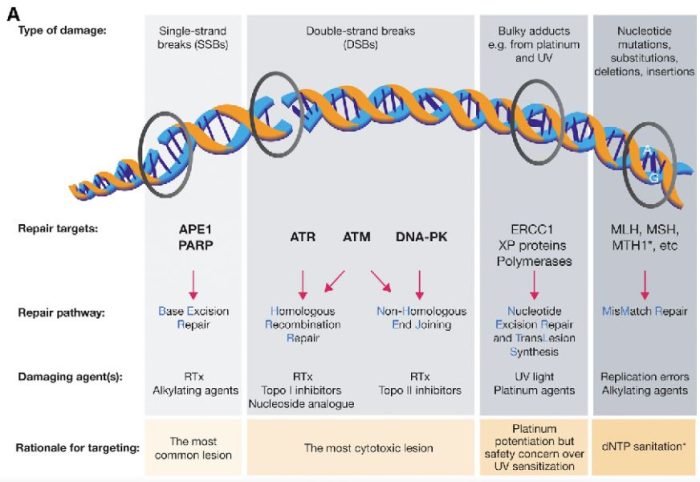

図の解説:PARP(ポリ(ADP-リボース)ポリメラーゼ)は、主に一重鎖DNA切断(single-strand breaks, SSB)の修復に関与しています。具体的には、以下のようなDNAダメージを修復します:

PARPは、これらの損傷を修復することで、細胞のゲノム安定性を維持し、細胞の生存を助けます。しかし、BRCA1やBRCA2遺伝子に変異がある場合、これらの修復経路が正常に機能しないため、PARP阻害剤(PARPi)はこれらのがん細胞に対して特に効果的です。PARPiはPARPの機能を阻害することで、がん細胞に蓄積するDNA損傷を増加させ、最終的にがん細胞の死を誘導します。

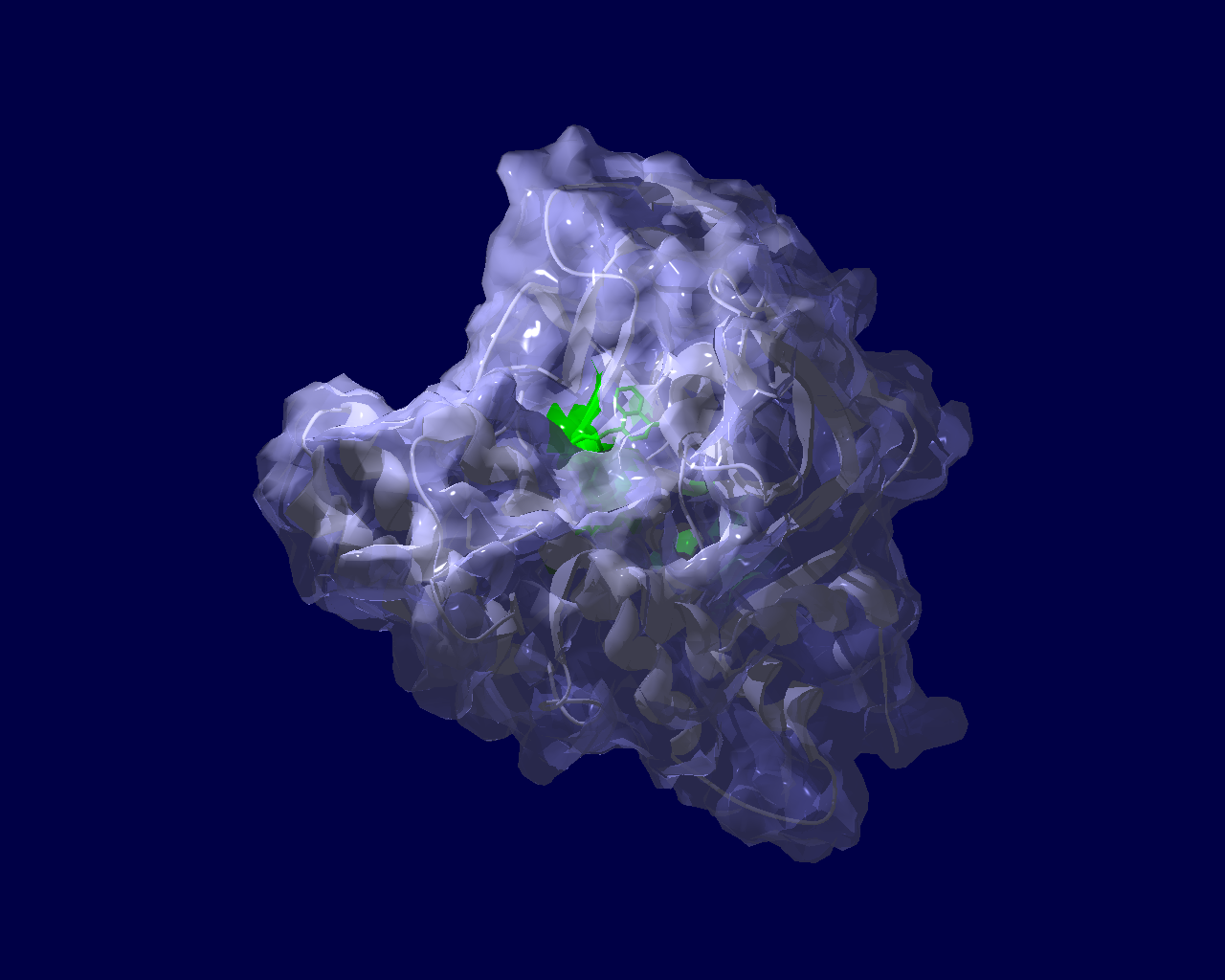

PARP分子と、Olaparibの結合を結晶構造で眺めてみました。この構造だと、PARPたんぱく質に結合したOlaparibは分子の中に深く埋もれているように見えます。(結合した後にたんぱく質の構造変化が起きる?)緑色の分子がolaparibで、それ以外が human PARPのcatalytic domain。下図は下記文献のデータを基にわたくしが作図しました。

Ogden, T. E. H., Yang, J.-C., Schimpl, M., Easton, L. E., Underwood, E., Rawlins, P. B., McCauley, M. M., Langelier, M.-F., Pascal, J. M., Embrey, K. J., & Neuhaus, D. (2021). Dynamics of the HD regulatory subdomain of PARP-1; substrate access and allostery in PARP activation and inhibition. 49(4), 2266–2288.

https://doi.org/10.1093/nar/gkab020

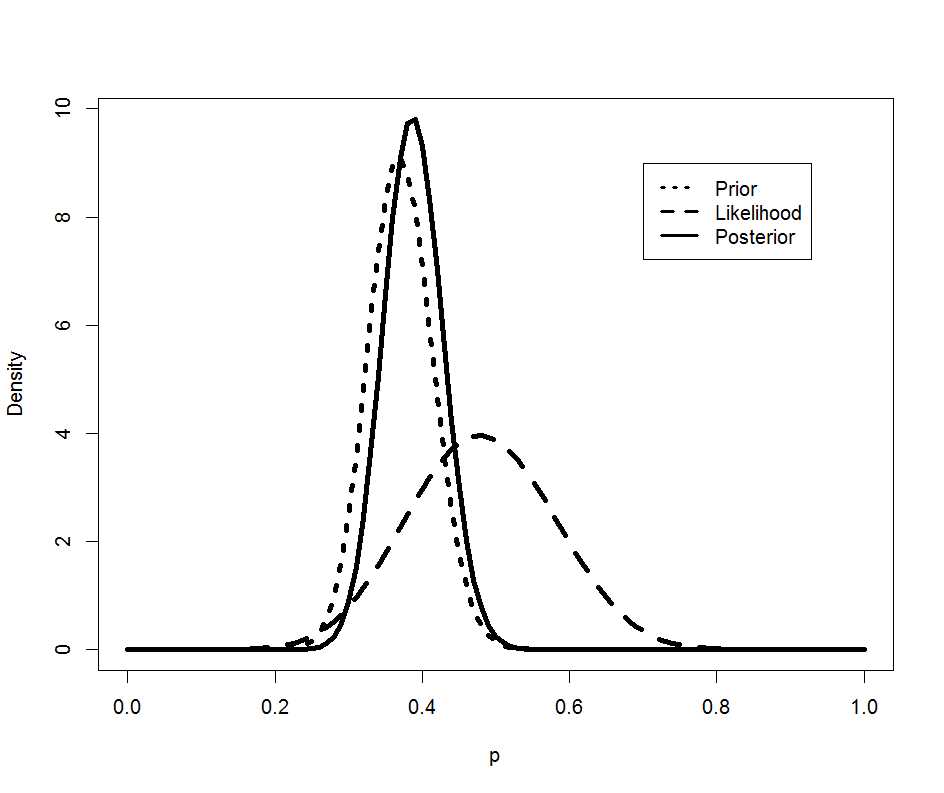

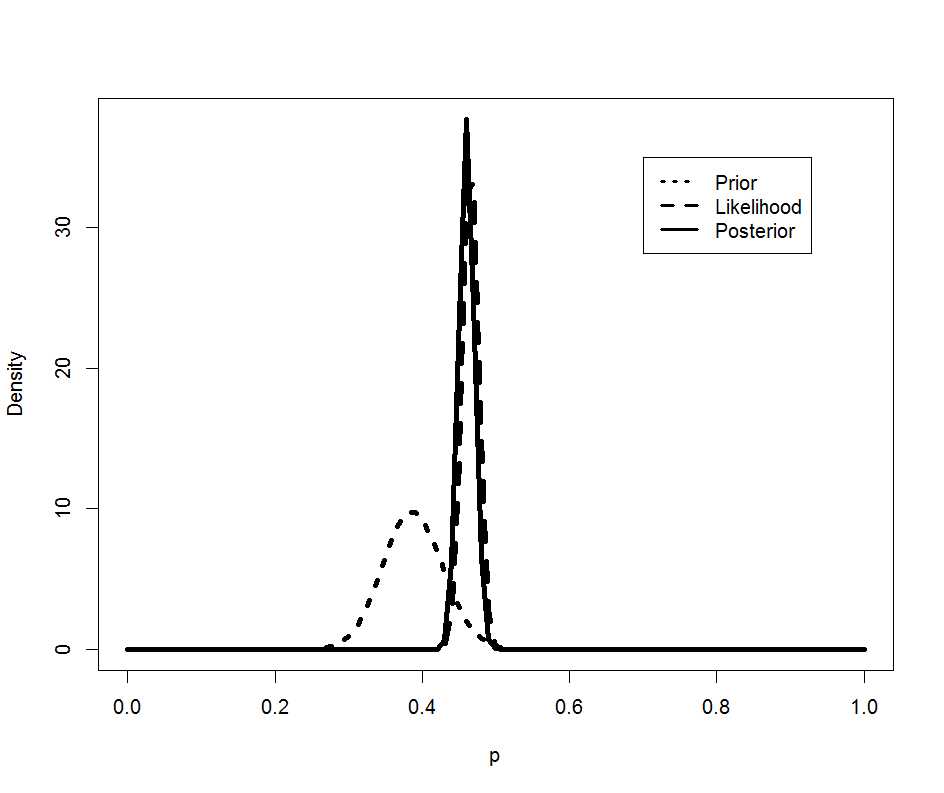

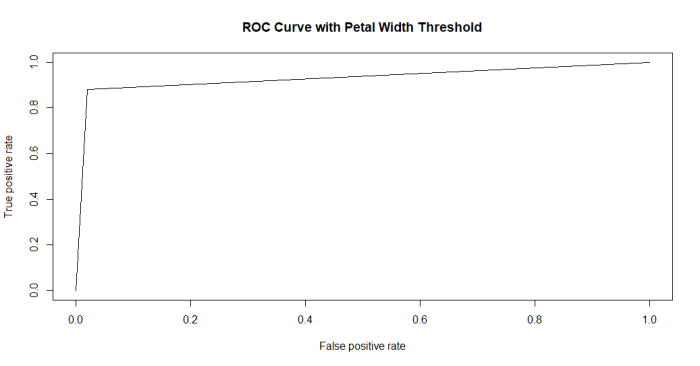

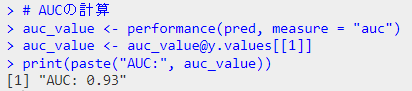

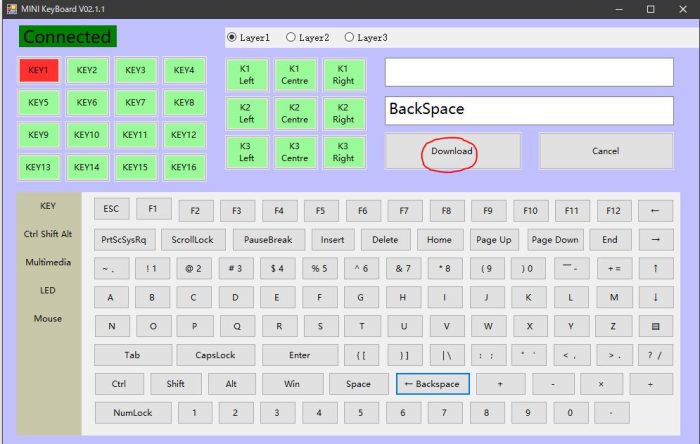

アイキャッチ画像のような図を論文で見ていて、どうやって描いたもので何を意味するのか自分でもとデータから図を描きつつ見方を学ぼうという事で書籍を開きながら試してみました。その時のチョコっとした学びを書き留めた備忘録です。

#初心者によるやってみた系の記事

参考にしたのは「ゼロから実践する 遺伝統計学セミナー〜疾患とゲノムを結びつける」という本です。参考というか、このホームページに書いていることは、この本に書かれていることをたどっただけです。書籍にはCGYWINのインストール、plinkの使い方から、マンハッタンプロットまで丁寧に書かれていますので、この書籍を読めば私のホームページは見る価値があまりないかもしれません。

ここで、今回使用したRのスクリプトを掲載しようと思っていたのですが、そのスクリプトというのが上記書籍を購入したらダウンロードできるというデータの中にあるスクリプトで、ごくわずか変更(ファイル格納ディレクトリやデータファイル名を変更)した程度なので、さすがに掲載したらまずそうだと思いとどまり、別の例で示します。

CRANから、Rのパッケージqqmanをインストールしてあるとして次になります。

library(qqman) manhattan(gwasResults, chr="CHR", bp="BP", snp="SNP", p="P" )

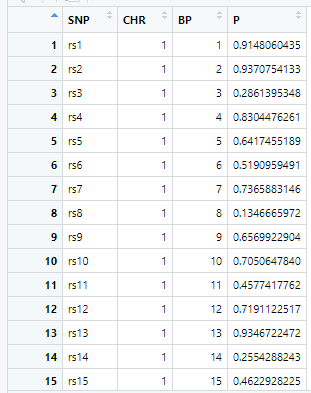

gwasResultsはパッケージqqman付属のデータで、次のようにSNPの名称・染色体・位置・表現型と当該SNPとの関連性がないと仮定した場合の確率P値の4項目のデータが16470行続きます。

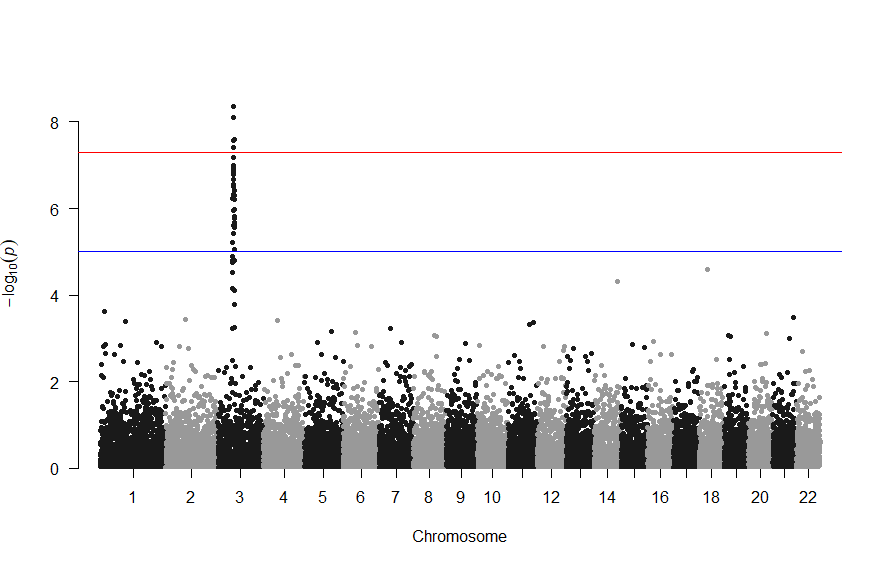

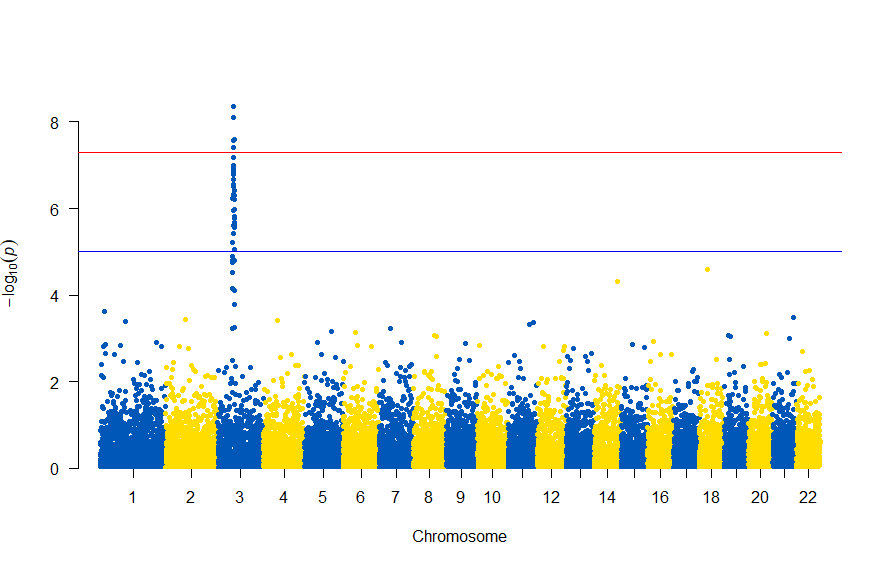

で、このスクリプトの結果は次の図のようになります。

色が寂しいので、同じデータでウクライナを思わせるカラーで表現すると

# Load the library

library(qqman)

# Make the Manhattan plot on the gwasResults dataset

manhattan(gwasResults, chr="CHR", bp="BP", snp="SNP", p="P", col = c("#0057B7", "#FFDD00"))

次のようになります

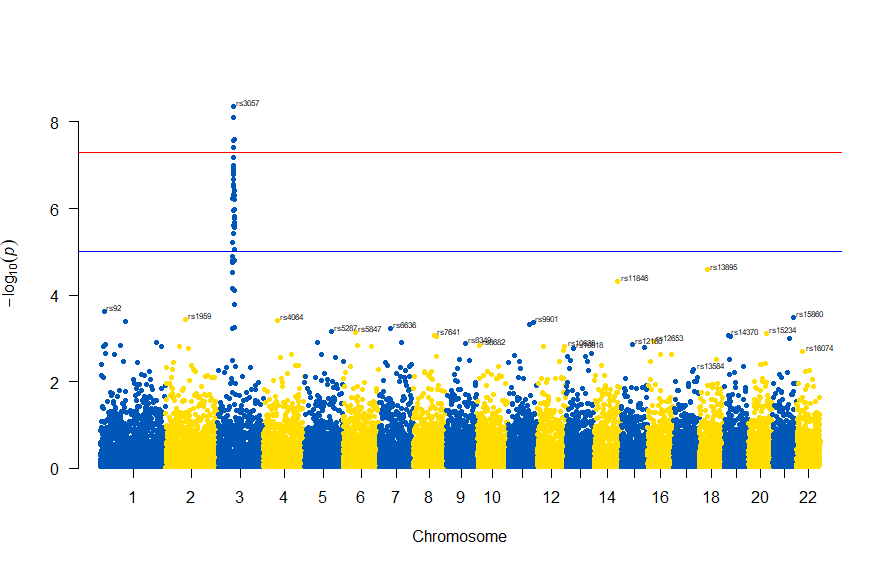

図の中のSNPを表示するには , annotatePval = 0.01 を追加します

# Load the library

library(qqman)

# Make the Manhattan plot on the gwasResults dataset

manhattan(gwasResults, chr="CHR", bp="BP", snp="SNP", p="P", col = c("#0057B7", "#FFDD00"), annotatePval = 0.01)

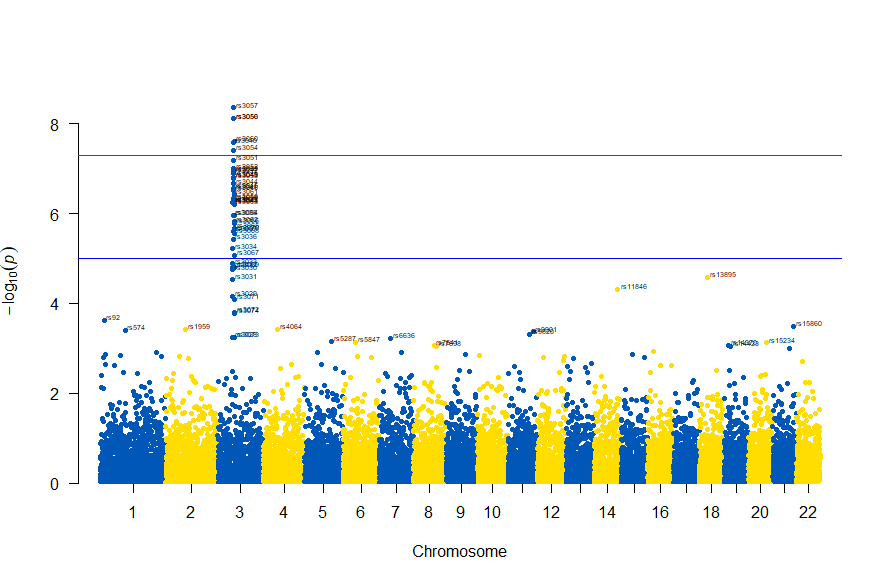

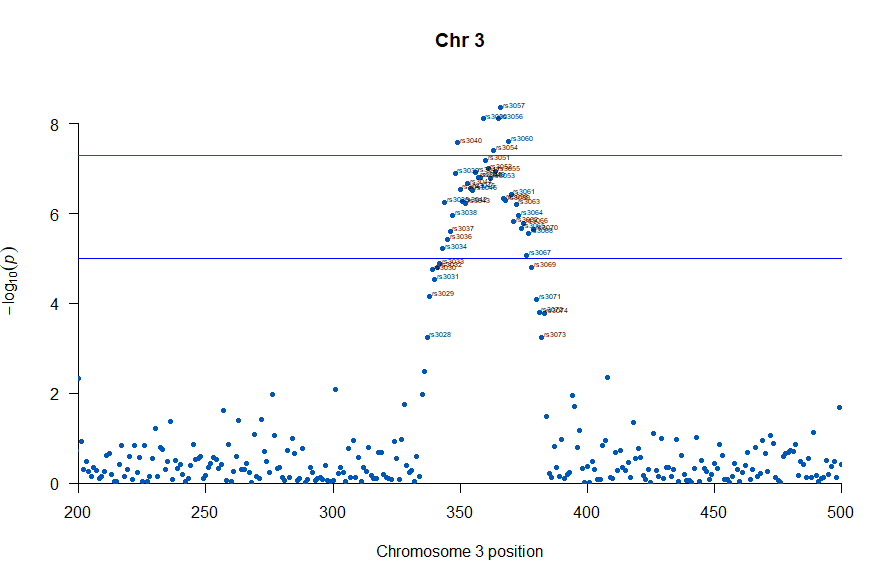

3番染色体の集中していくつものSNPが高い-log10(p)値を示すのに頂上の変異しか名前が表示されないので annotateTop = FALSE というオプションをつけてみました。

# Load the library

library(qqman)

# Make the Manhattan plot on the gwasResults dataset

manhattan(subset(gwasResults, CHR == 3), chr="CHR", bp="BP", snp="SNP", p="P"

, col = c("#0057B7", "#FFDD00"), annotatePval = 0.001

, annotateTop = FALSE, main = "Chr 3")

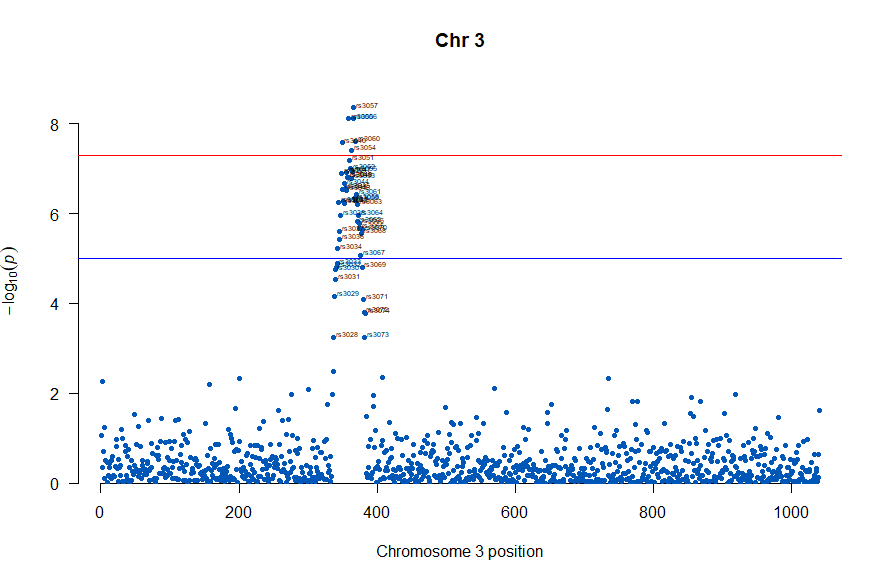

3番目の染色体に関連のありそうなSNPが集中しているようなので3番目のsubsetを表示してみます。肝は subset(gwasResults, CHR ==3) の個所です。

# Load the library

library(qqman)

# Make the Manhattan plot on the gwasResults dataset

manhattan(subset(gwasResults, CHR == 3), chr="CHR", bp="BP", snp="SNP", p="P"

, col = c("#0057B7", "#FFDD00"), annotatePval = 0.001

, annotateTop = FALSE, main = "Chr 3")

気になるあたりをもう少し拡大します

# Load the library

library(qqman)

# Make the Manhattan plot on the gwasResults dataset

manhattan(subset(gwasResults, CHR == 3), chr="CHR", bp="BP", snp="SNP", p="P"

, col = c("#0057B7", "#FFDD00"), annotatePval = 0.001

, annotateTop = FALSE, main = "Chr 3", xlim = c(200,500))

上記の作図は単純で、各Chromosome上のそれぞれの位置でp値をプロットしただけのものなので、結局はp値を計算して大きな外れ値(-> association)を示す位置を目視で確認しやすくしたものだという事がわかりました。

肝となるのはp値の計算になります。それでは、p値をどうやって計算していたかというと、複数人のSNPのデータと、それぞれのサンプル(の人)の興味のある表現型のデータを用いて表現型と無関係なSNPか表現型とassociateしているSNPかを計算しています。

plink.exe (windows用)がインストールされていて、冒頭の書籍の付録のファイル 1KG_EUR_QC.bed, 1KG_EUR_QC.bim, 1KG_EUR_QC.fam とかのデータがあったとして、コマンドプロンプトで次を実施しました。

plink.exe --bfile 1KG_EUR_QC --out 1KG_QC_Pheno1 --pheno phenotype1.txt --logistic --ci 0.95

上記で作成されるファイルから、p値等のデータを抽出するのにawkを使いました。awkを使うのに、MacOSだとawkはデフォルトで入っているのですが、今回はwindowsを使いましたのでCYGWINで実行しました。

awk '{print $2"\t"($1*100000000000+$3)"\t"$12}' 1KG_QC_Pheno1.assoc.logistic > 1KG_QC_Pheno1.assoc.logistic.P.txt

この出力で生成される 1KG_QC_Pheno1.assoc.logistic.P.txt ファイルを上の方で記したRスクリプトのインプットとすることで、マンハッタンプロットを描くことができました。

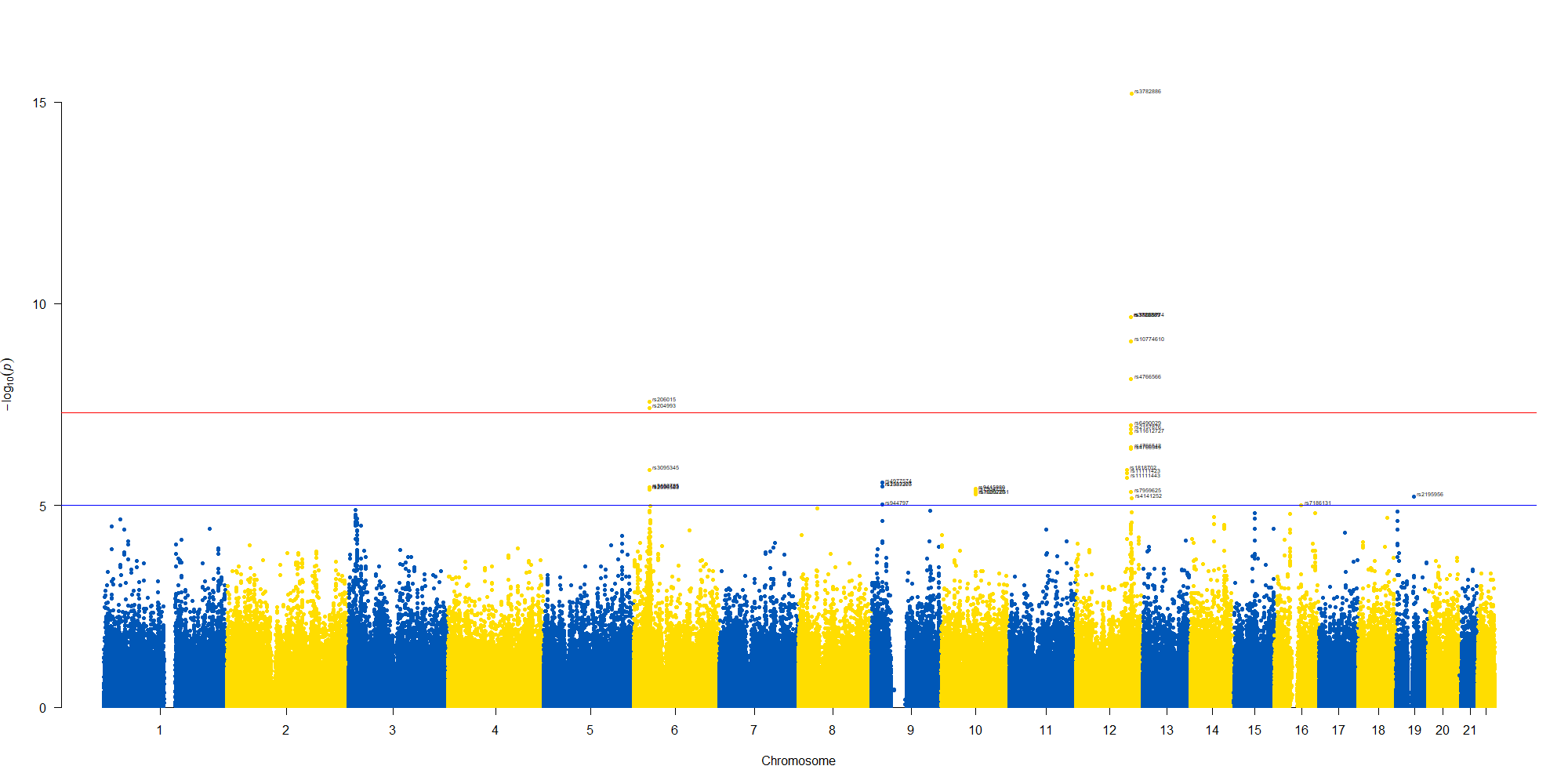

さらなる練習用という事で「心筋梗塞1666症例および対照健常者3198名のGWAS」データが公開されており、使用についての制限がないためこちらのデータで試してみました。

(制限がない=NBDC非制限公開データとは、アクセスに制限を設けることなく、誰でも利用することが可能な公開データです。)

次のサイトからダウンロードしました。

https://humandbs.biosciencedbc.jp/

「ゼロから実践する 遺伝統計学セミナー〜疾患とゲノムを結びつける」に従った例でawkで行っっていた、行の抽出の部分はをsqlで行いました。

好みの問題かもしれませんが、windowsでlinux emulatorみたいなのを稼働させてawk走らせるより、Microsoft AccessのSQLで抽出するのが素直に開発しやすいと感じました。

SELECT Release_L12vsUniv06_AllSample.[#SNPID] AS SNP, Release_L12vsUniv06_AllSample.chr AS chr, Release_L12vsUniv06_AllSample.chrloc AS location, Release_L12vsUniv06_AllSample.ptrend AS p INTO [0010 GWAS_results] FROM Release_L12vsUniv06_AllSample;

で作図は次のようにしました。

library(readxl)

library(qqman)

GWAS_results <- read_excel("0010 GWAS_results.xlsx")

manhattan(GWAS_results, chr="chr", bp="location", snp="SNP", p="p"

, col = c("#0057B7", "#FFDD00"), annotatePval = 0.00001

, annotateTop = FALSE)

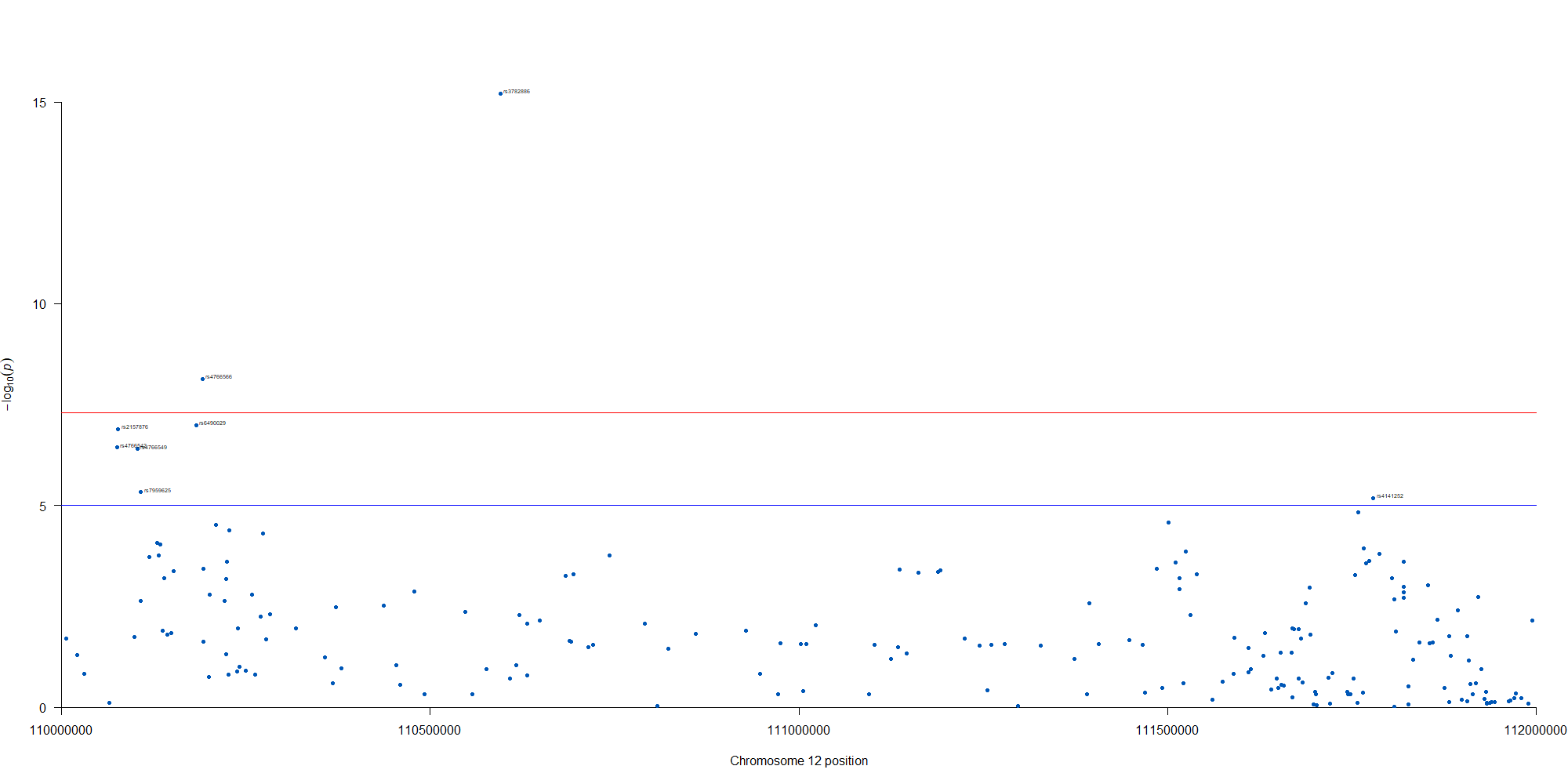

manhattan(subset(GWAS_results, chr == 12), chr="chr", bp="location", snp="SNP", p="p"

, col = c("#0057B7", "#FFDD00"), annotatePval = 0.00001

, annotateTop = FALSE, xlim = c(1.1e+8, 1.12e+08))

12番染色体の3’側に不均衡が集中している様なのでそのあたりを拡大したりしました。

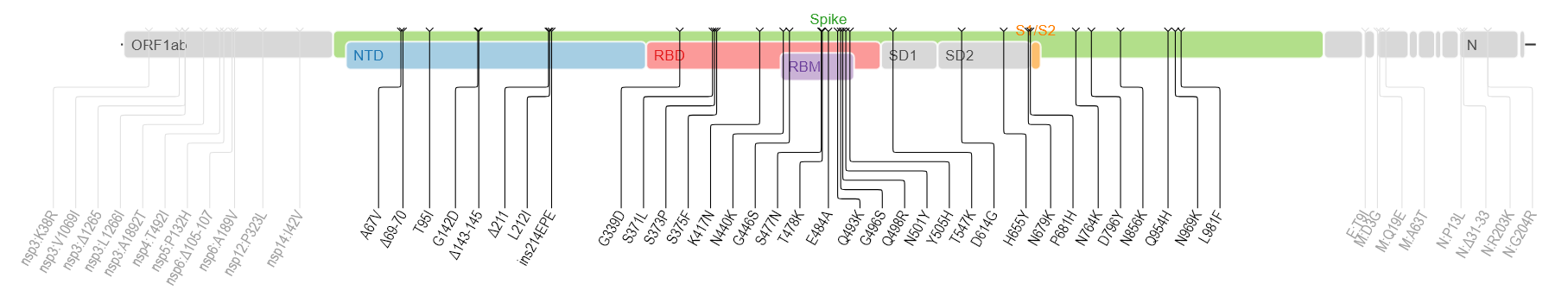

2021.11.26 WHOがCOVID-19の原因ウイルスであるSARS-CoV-2の新規の変異(B.1.1.529)を評価するために、アドバイザリーグループ(The Technical Advisory Group on SARS-CoV-2 Virus Evolution (TAG-VE))を開催しました。

南アフリカの最近の感染拡大と、B.1.1.529の検出の増加がcoinciding (一致している?)していて、予備的な検証ではこのバリアントによる再感染のリスクが高いことが示唆されているそうです。

現在のSARS-CoV-2PCR診断では、引き続きこの変異体が検出されます。いくつかの研究室で、広く使用されている1つのPCRテストでは、3つのターゲット遺伝子の1つが検出されない(S遺伝子ドロップアウトまたはS遺伝子ターゲット障害と呼ばれる)ため、シーケンスの確認が完了するまで、このテストをこのバリアントのマーカーとして使用できることが示されています。このアプローチを使用すると、このバリアントは以前の感染の急増よりも速い速度で検出されており、このバリアントが感染拡大にアドバンテージを持っている可能性があることを示唆しています。

アルファベット順で ν(ニュー)とξ(クサイ)をスキップしてのο(オミクロン)だそうです。習という中国で多い名前や英語の単語のnewを避けるためだそうです。

> avoid confusion with the English word “new” and the common Chinese surname Xi

B.1.1.529は多くの変異を示します。(下図はwiki pediaから)多くの箇所に変異が検出されます。

spike蛋白のアミノ酸残基にかかる変異だけでも以下のものが含まれるそうです。

A67V, Δ69-70, T95I, G142D, Δ143-145, Δ211, L212I, ins214EPE, G339D, S371L, S373P, S375F, K417N, N440K, G446S, S477N, T478K, E484A, Q493K, G496S, Q498R, N501Y, Y505H, T547K, D614G, H655Y, N679K, P681H, N764K, D796Y, N856K, Q954H, N969K, L981F

どう、料理したら良いかなぁ

新型コロナウイルス肺炎COVID-19の原因ウイルスである、SARS-CoV-2ウイルスのSpikeタンパク質のアミノ酸配列についてみてみました。一般にウイルスで変異のない株を野生型と呼びます。野生型のタンパク質のアミノ酸残基の配列を見ると、484番目のアミノ酸残基がE(Glutamic Acid, Glu)で、501番目のアミノ酸残基はN(Asparagine, Asn)です。

ちなみに変異の書き方ですが、この484番目のEがK(Lysine, Lys)に変異した場合E484Kと表記します。同様に501番目のNがY(Tyrosine, Tyr)に変異した場合にN501Yと表記します。

LOCUS QOS45029 1273 aa linear SYN 28-OCT-2020 DEFINITION SARS-CoV-2 spike [synthetic construct]. ACCESSION QOS45029 VERSION QOS45029.1 DBSOURCE accession MW036243.1

KEYWORDS . SOURCE synthetic construct ORGANISM synthetic construct

other sequences; artificial sequences.

REFERENCE 1 (residues 1 to 1273)

AUTHORS Wussow,F., Chiuppesi,F. and Diamond,D.

TITLE Development of a Multi-Antigenic SARS-CoV-2 Vaccine Using a

Synthetic Poxvirus Platform

JOURNAL Unpublished

REFERENCE 2 (residues 1 to 1273)

AUTHORS Wussow,F., Chiuppesi,F. and Diamond,D.

TITLE Direct Submission

JOURNAL Submitted (23-SEP-2020) Hematology, City of Hope, 1500 E Duarte Rd,

Duarte, CA 91010, USA

FEATURES Location/Qualifiers

source 1..1273

/organism="synthetic construct"

/db_xref="taxon:32630"

/clone="C35/41"

Protein 1..1273

/product="SARS-CoV-2 spike"

CDS 1..1273

/coded_by="MW036243.1:145058..148879"

/transl_table=11

ORIGIN

1 mfvflvllpl vssqcvnltt rtqlppaytn sftrgvyypd kvfrssvlhs tqdlflpffs

61 nvtwfhaihv sgtngtkrfd npvlpfndgv yfasteksni irgwifgttl dsktqslliv

121 nnatnvvikv cefqfcndpf lgvyyhknnk swmesefrvy ssannctfey vsqpflmdle

181 gkqgnfknlr efvfknidgy fkiyskhtpi nlvrdlpqgf saleplvdlp iginitrfqt

241 llalhrsylt pgdsssgwta gaaayyvgyl qprtfllkyn engtitdavd caldplsetk

301 ctlksftvek giyqtsnfrv qptesivrfp nitnlcpfge vfnatrfasv yawnrkrisn

361 cvadysvlyn sasfstfkcy gvsptklndl cftnvyadsf virgdevrqi apgqtgkiad

421 ynyklpddft gcviawnsnn ldskvggnyn ylyrlfrksn lkpferdist eiyqagstpc

481 ngvEgfncyf plqsygfqpt Ngvgyqpyrv vvlsfellha patvcgpkks tnlvknkcvn

541 fnfngltgtg vltesnkkfl pfqqfgrdia dttdavrdpq tleilditpc sfggvsvitp

601 gtntsnqvav lyqdvnctev pvaihadqlt ptwrvystgs nvfqtragcl igaehvnnsy

661 ecdipigagi casyqtqtns prrarsvasq siiaytmslg aensvaysnn siaiptnfti

721 svtteilpvs mtktsvdctm yicgdstecs nlllqygsfc tqlnraltgi aveqdkntqe

781 vfaqvkqiyk tppikdfggf nfsqilpdps kpskrsfied llfnkvtlad agfikqygdc

841 lgdiaardli caqkfngltv lpplltdemi aqytsallag titsgwtfga gaalqipfam

901 qmayrfngig vtqnvlyenq klianqfnsa igkiqdslss tasalgklqd vvnqnaqaln

961 tlvkqlssnf gaissvlndi lsrldkveae vqidrlitgr lqslqtyvtq qliraaeira

1021 sanlaatkms ecvlgqskrv dfcgkgyhlm sfpqsaphgv vflhvtyvpa qeknfttapa

1081 ichdgkahfp regvfvsngt hwfvtqrnfy epqiittdnt fvsgncdvvi givnntvydp

1141 lqpeldsfke eldkyfknht spdvdlgdis ginasvvniq keidrlneva knlneslidl

1201 qelgkyeqyi kwpwyiwlgf iagliaivmv timlccmtsc csclkgccsc gscckfdedd

1261 sepvlkgvkl hyt

//

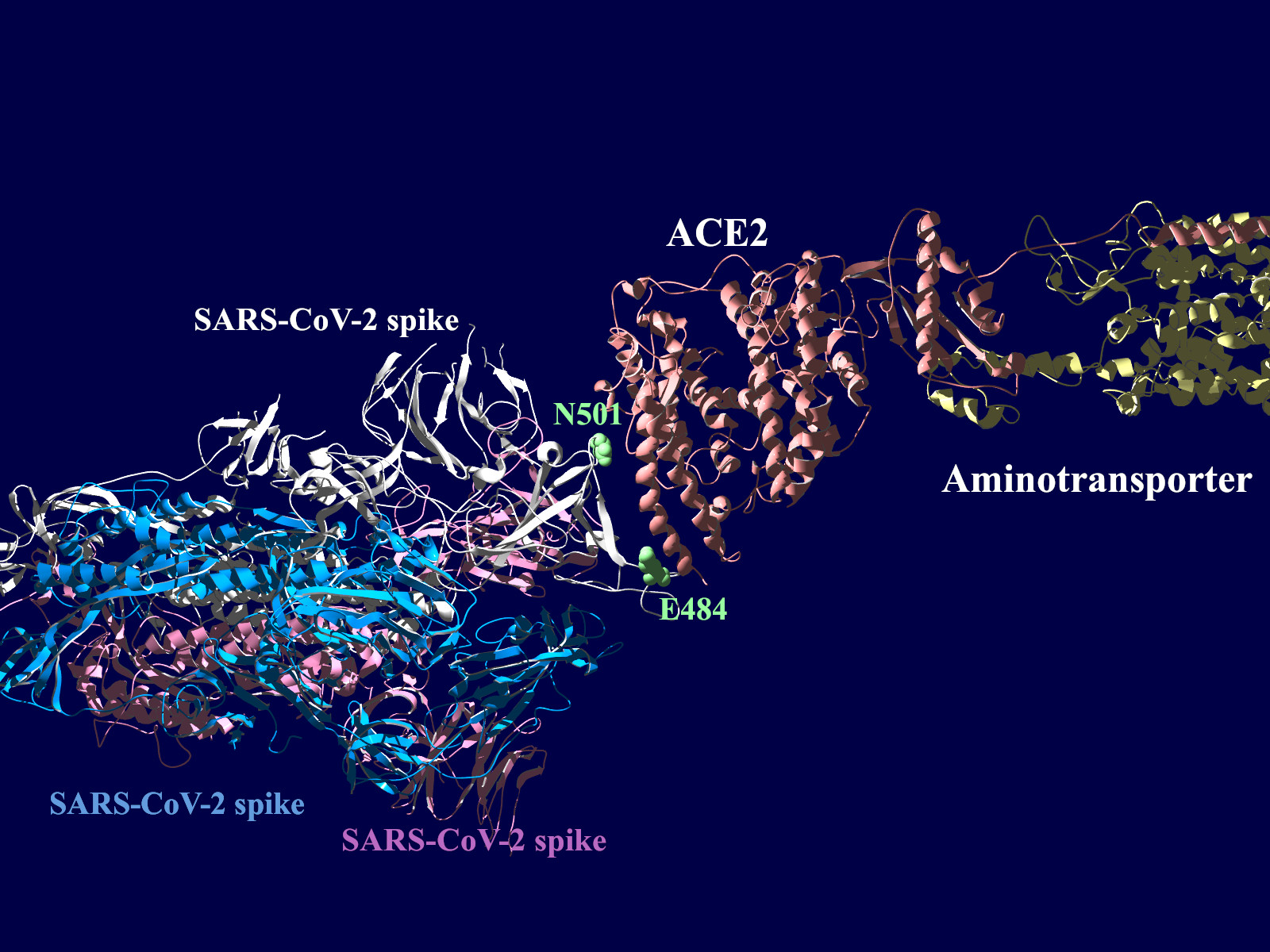

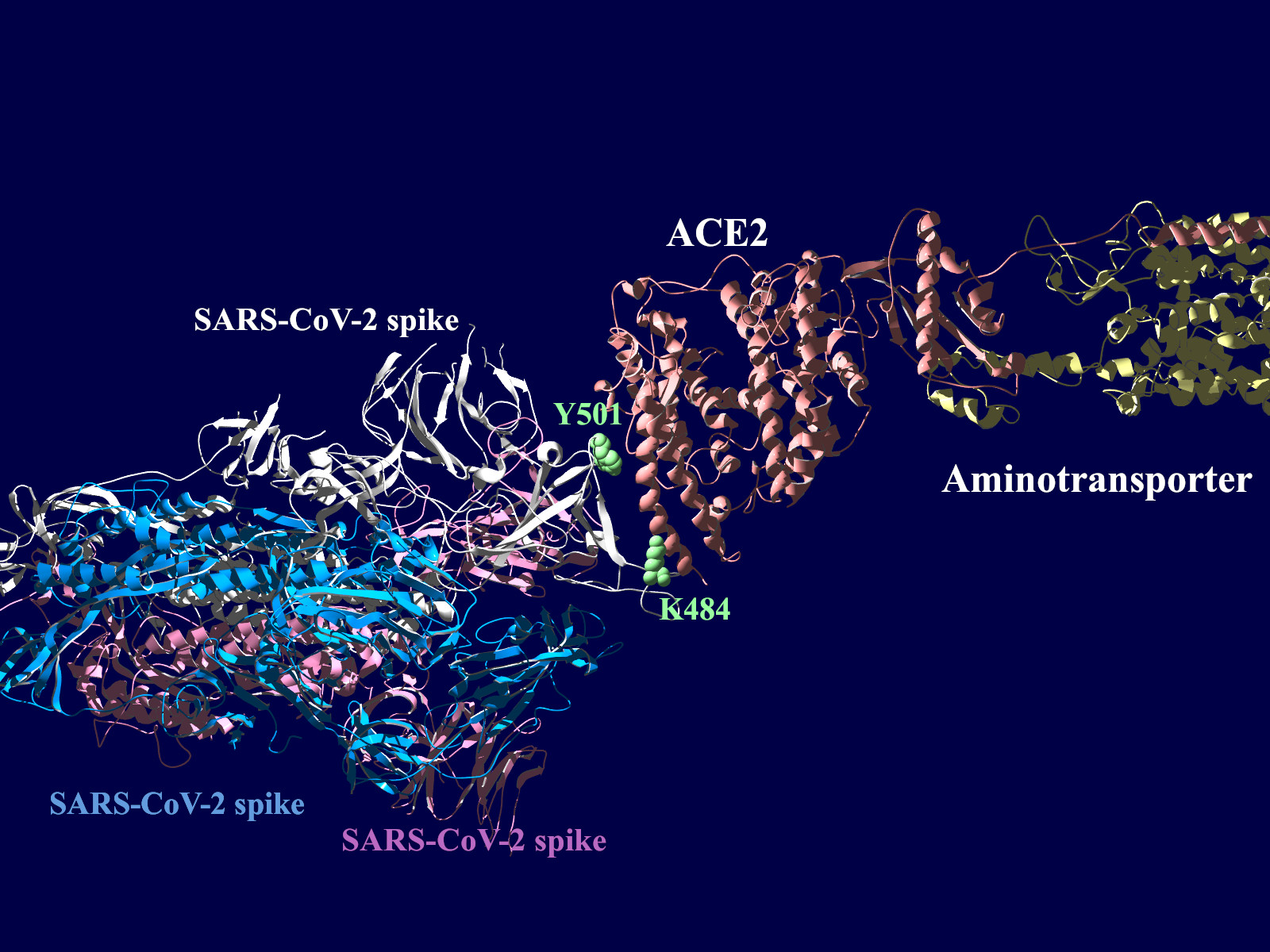

多くの変異が発生するはずのウイルスで、E484KやN501Yの変異は感染性や伝播性の増加が懸念されるとされています。この変異のある場所は、ヒトの細胞に侵入する際にまず結合する分子であるangiotensin-converting enzyme2 (ACE2)との結合に重要だとされています。このあたりを昨年も見た記憶がありますが、変異のウイルスが国内でも報告されるようになってきましたので、その変異の起こる部分が立体的にどういう位置にあたるのか、SARS-CoV-2 SpikeとAEC2が結合してる立体構造を調べたデータの公開データを元に見てみました。まずは野生型のSpikeで示します。緑色のモコモコしたのが変異すると悪い性質を獲得するのではないかと心配されている場所のアミノ酸残基を示します。(下図)

上図の当該のアミノ酸残基を、変異したアミノ酸残基に置き換えた図もしめします。(下図)側鎖のエネルギーだけ小さくなるように調整はしましたが、示された構造全体は動かない設定ですので本当にこの形になっているとは期待できません。

その変異部分の拡大図です(下図)

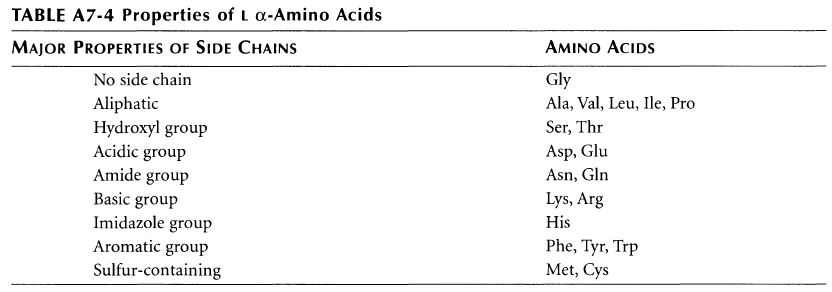

アミノ酸の基本的な分類に荷電の陽性・陰性があります。E484Kは484番目のEがKに変異した事を示します。野生型のEは陰性荷電を示す酸性極性側鎖アミノ酸で、一方変異型のKは陽性荷電を示す塩基性アミノ酸です。感染性等が増加する懸念は、荷電が逆転することでACE2への結合に変化が起きると推定されることから来ているものと思われます。また、ワクチンの効果が減弱するという懸念もあります。この懸念は、ワクチンの効果が結合領域(328-533aa)に対する抗体によるところがあって、この変異が結合領域の中心付近の484aaに存在している点に加えて、荷電が逆転することで抗体の結合にも変化が起きると推定されるところからきているのではないかと思います。

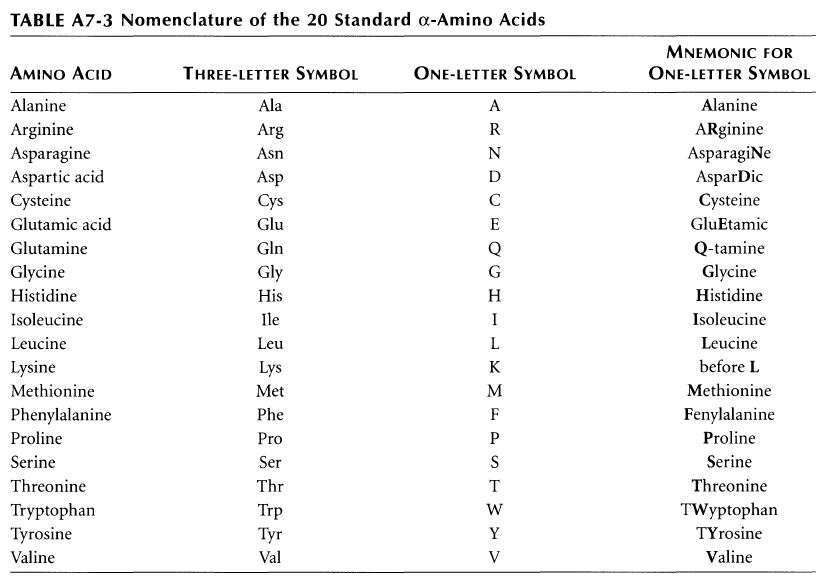

ちなみに基礎知識としてアミノ酸側鎖の性質と、略号をまとめた物をコールドスプリングハーバー(CSH)の実験プロトコール集のような書籍のMolecula Cloning a laboratory manual volume 3から抜粋です。E (Glu)がAcidic groupで、K(Lys)がBasic groupとなっています。

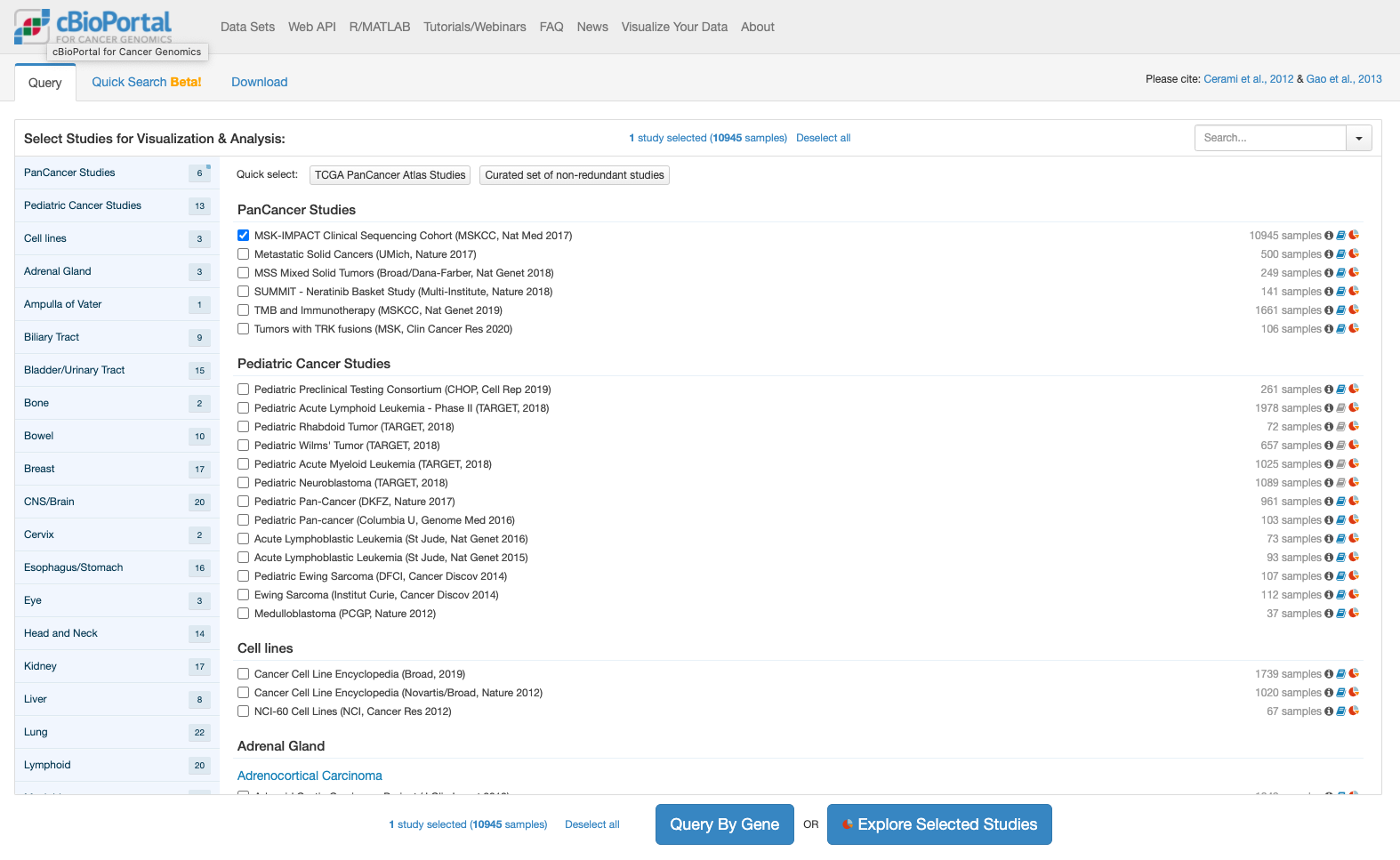

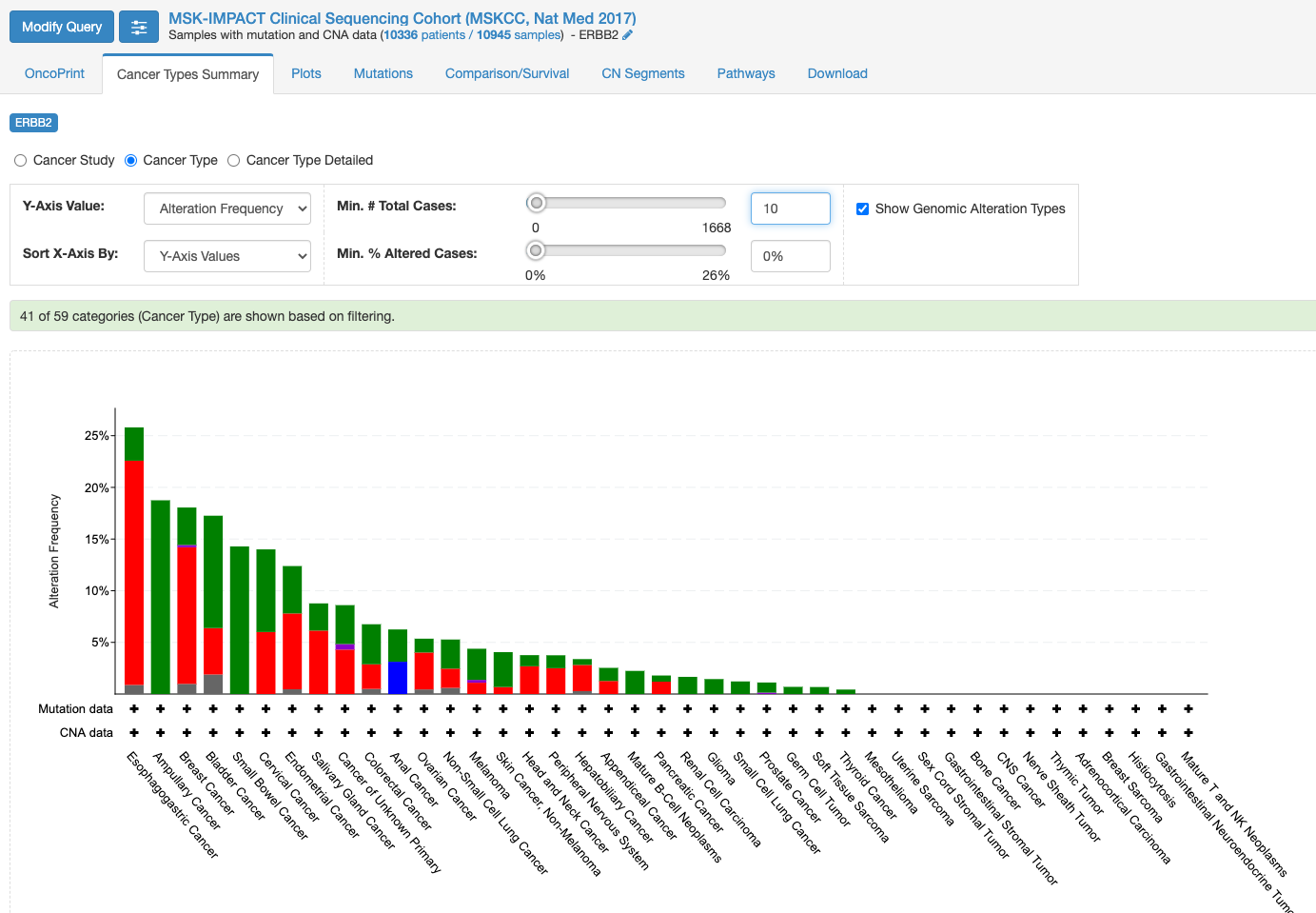

cBioPortal for Cancer Genomicsとは何か、という問いについてはオリジナルのサイトをみていただくのが速いのでしょう。公開された遺伝子関連情報(変異情報、遺伝子発現情報、臨床情報他)と解析ツールを組み合わせたもの、というのが私の理解です。これを使って何が判るのか、ということを少し触ってみましたので、メモしてゆきます。

今回はまず第一弾として遺伝子変異にかかる情報を表示してみました。

cBioPortalでは解析しやすい形で格納されたデータが提供されています。今回はとりあえずこの提供されたデータを表示することにします。次の図はcBioPortalのトップページです。

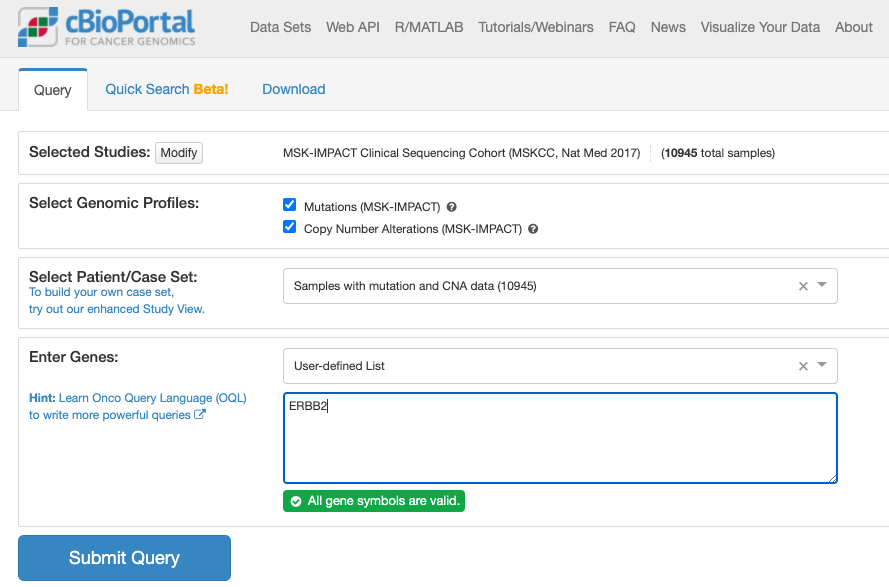

上記でボタンをクリックすると推移する画面が次です。

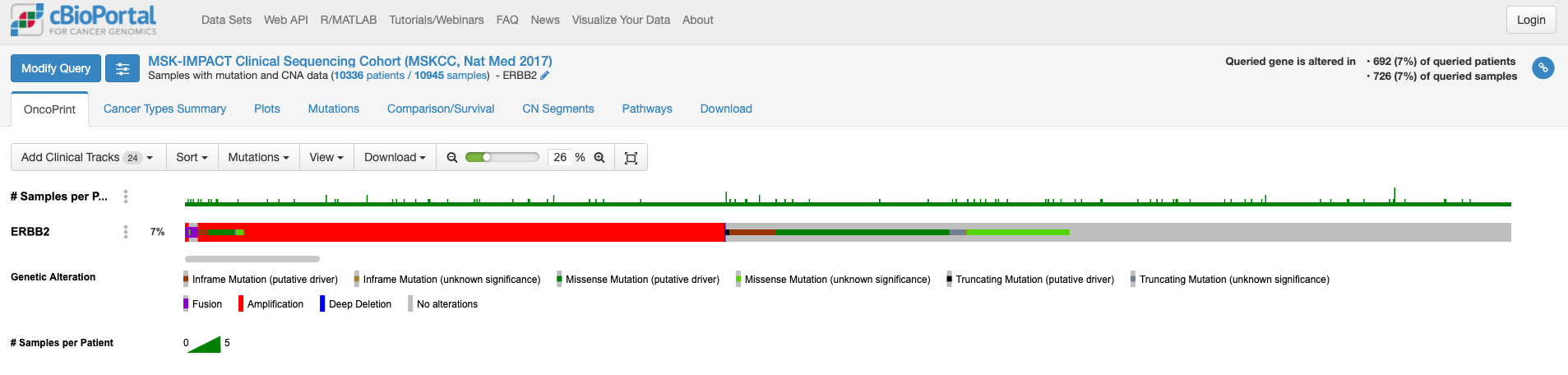

上記でクリックしてしばらくすると表示される画面が次の通りです。

この画面はOnco Printというタブの情報が示されています。赤くて太いラインが目に入ります。下のレジェンドをみますと、これはgene amplificationを示しています。この図もよく見るといろいろな情報があります。解析対象の症例の7%の症例に何らかの変異がある、あるいは、解析対象のサンプルの7%のサンプルに何らかの異常があること、ドライバー変異もあれば、意義が不明の変異もあることも示されています。点変異の様なものもあれば他の遺伝子への融合や途中で翻訳が終わるtruncationになる変異もあります。

変異の頻度が比較的多いのが食道癌で、Amplificationの頻度が多い様です。

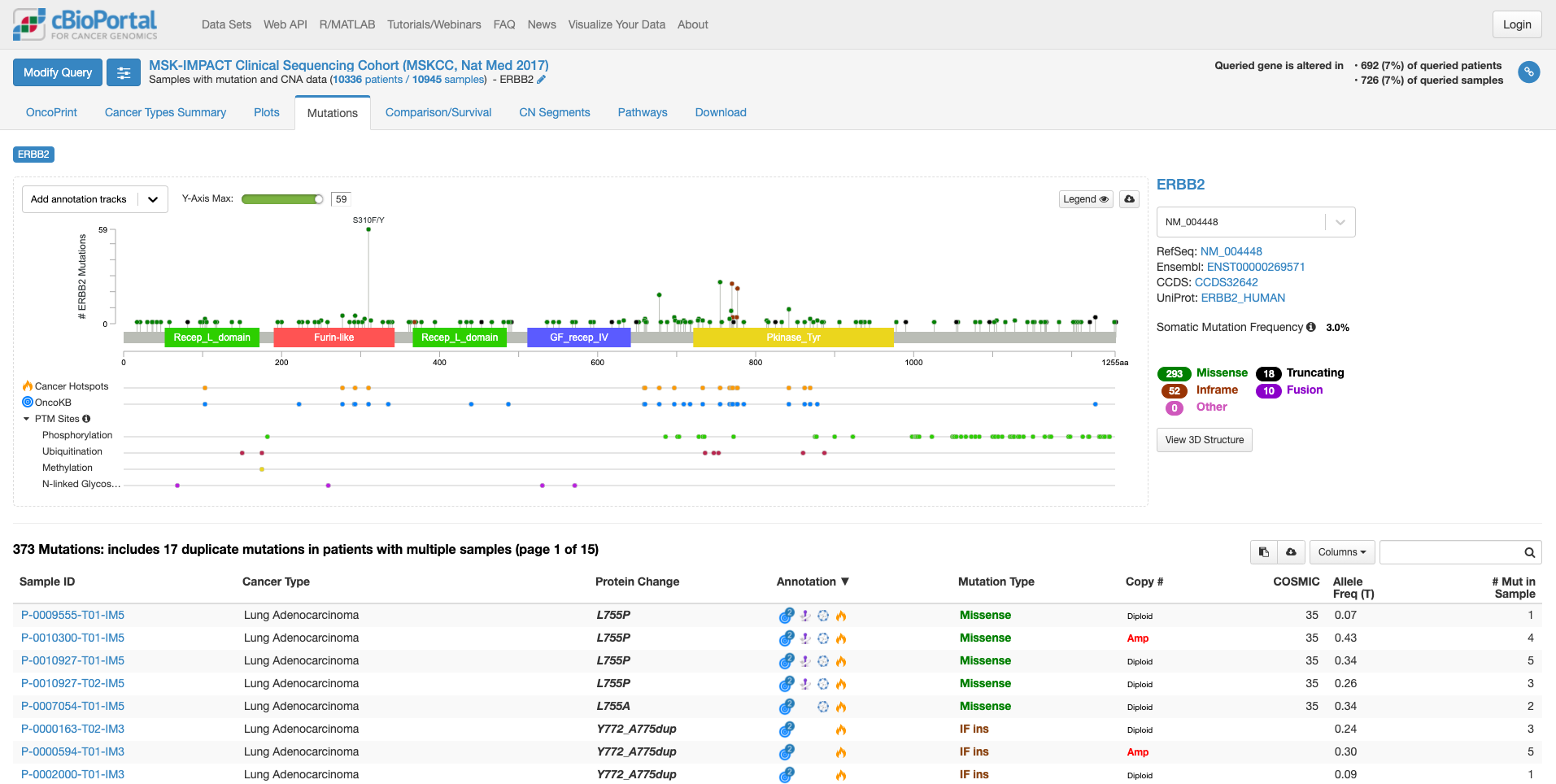

「Mutation」タブをクリックすると次の画面になります。

この画面では、遺伝子の中のどこに変異があるのか、ある場合は頻度も見て取れます。また、遺伝子の各ドメインが表示されています。たとえば、図の中で飛び出して目立っている、S310F/Yは310 番目のセリンがフェニルアラニンやタイロシンに変わる変異が報告されていることを示しています。その変異がある場所が翻訳後の蛋白質としてはFurin-likeドメインに存在することもわかります。そして、高い位置に丸があるのはその変異の頻度が多いことを示しています。この緑の丸をクリックすると、下の症例リストも変化します。

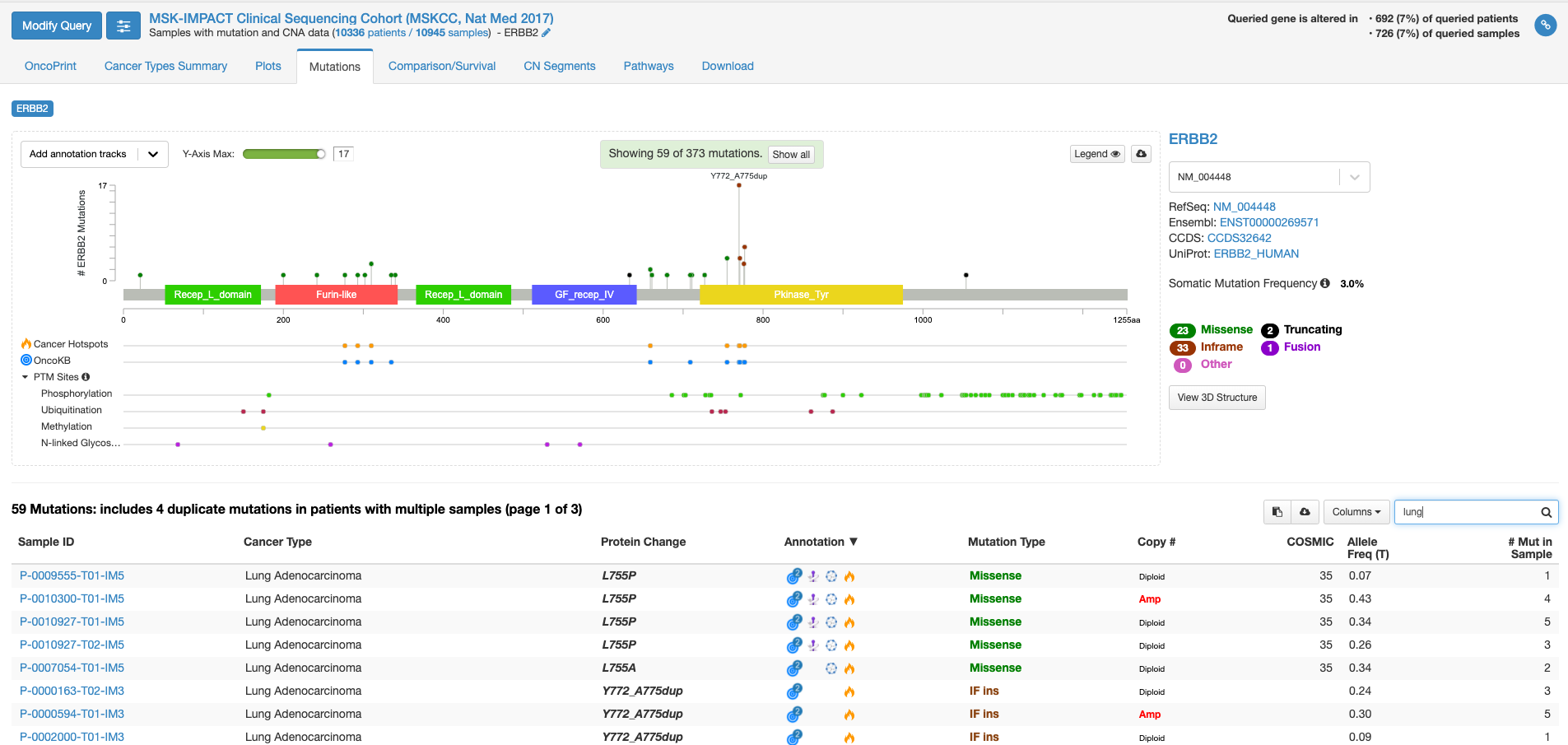

上図の状態で、右中程にあります虫メガネのアイコンのボックスに「lung」と入力してみますと、肺がんの情報に書き換えられます。(他のがん種の情報を除いた形で表示されます。)

全がん種の情報では変異の頻度が高かった310番目のアミノ酸残基の変異は目立たなくなりまして、代わりにY772_A775 dupという変異の頻度が目立っています。この変異があるのはタイロシンリン酸化酵素ドメインと呼ばれる部分になります。この変異をクリックして置いて、下の症例リストのアノテーションのアイコンにマウスをオーバーする(クリックはしない)と、この変異がhot spotにある事や、oncogenicの変異であることが表示されます。

虫メガネアイコンにBreastと入れて見ると今度は乳がんでの情報が表示されます。若干見た目は代わりますが、乳がんも肺がん同様に、タイロシンリン酸化酵素ドメインでの変異が多いことがわかります。一方、bladderと入力しますと、細胞外ドメインの変異が多く、細胞内のタイロシンリン酸化酵素ドメインの変異が相対的に少ないことがわかります。

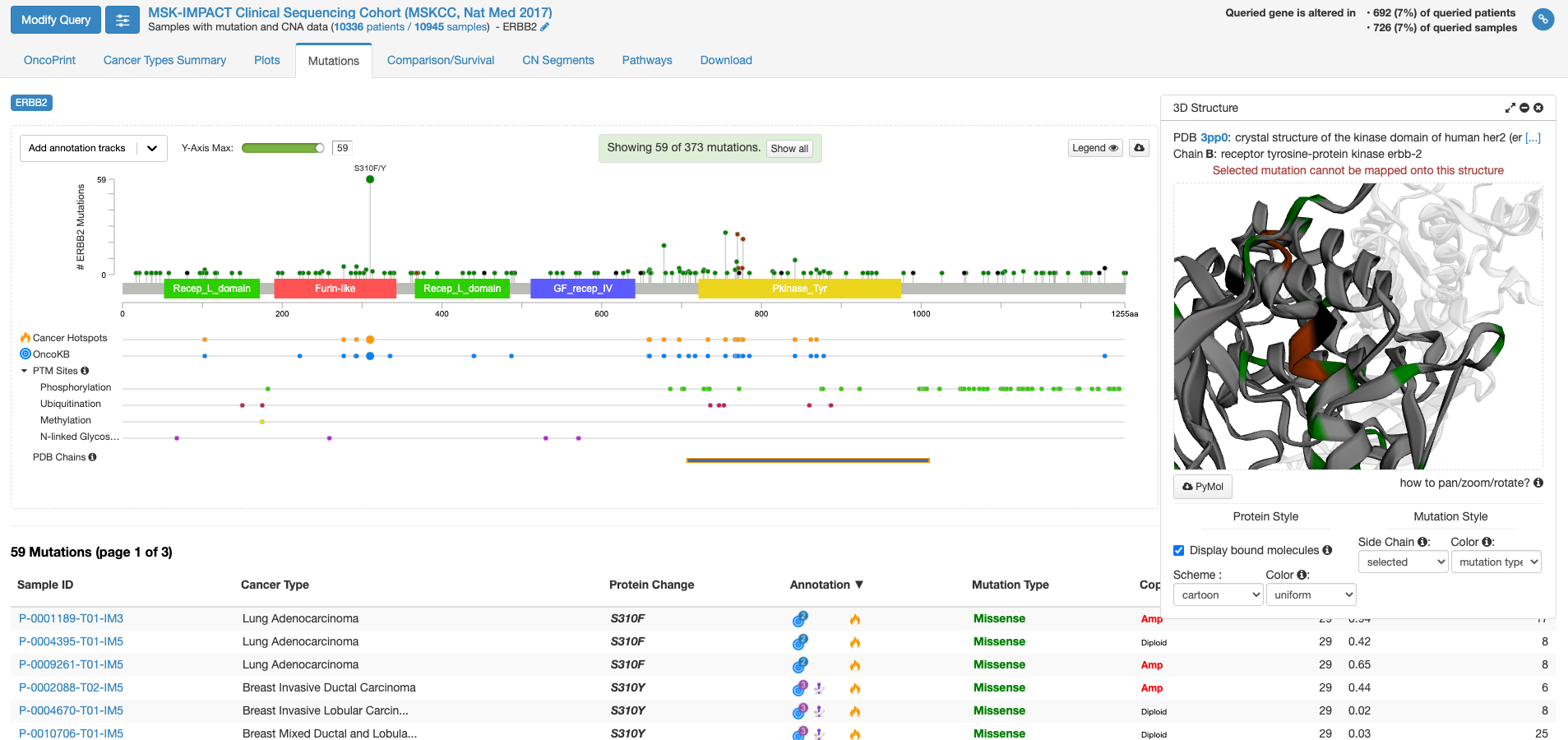

今度は右側の「view 3D structure」ボタンを押してみます。

PDB chains のあたりにマウスを持ってくると、PDBのどの立体構造情報で表示するかを選ぶことができる様になります。上図は一番上の行の右側(C末端側)の四角をクリックした状態です。右側の窓に立体構造が表示されまして、これをくりくり回したり拡大縮小して表示したりすることができます。グレーの中に緑やエンジの着色がなされている場所は変異が報告されている場所を示します。

他にも、機能がついていますがとりあえず変異に関しての面白そうな機能はこんなところです。

COVID19の原因ウイルスであるSARS-Cov-2のゲノム配列が公開されているのでとりあえず眺めてみました。こういうのは、ちょっと遺伝子を扱う研究的なことをやったことのある人にとっては、元ファイルを見た方が速いんでしょうけど。以前はアクセション番号とか言っていましたが、シーケンスのIDは次の通りです;NCBI Reference Sequence: NC_045512.2

約29.9千塩基とシーケンスは長いのでこのページの下の方に示すとして、主な構造です。GC% 約38%。11種類の遺伝子から12種類の成熟タンパク質が翻訳されると報告されています。上流(5′-末)から順に見ていきます。265塩基の5′-UTRに引き続いて出てくるタンパク質をコードしているとされる配列はORF1abとされています。

gene 266..21555

大きな遺伝子が設定されています

CDS join(266..13468,13468..21555)

ココは興味深いところで、13468までのコドンをtta aacと読んでおいて、一塩基分5’側に引き返して、その最後のcを次のコドンの頭と読み替えています。(2度Cを読むような感じ)

13451 gca[A]..caa[Q]..tcg[S]..ttt[F]..tta[L]..aac[N]..ggg[G]..ttt[F]..gcg[A]..gtg[V].. taa[stop] ->

13451 gca[A]..caa[Q]..tcg[S]..ttt[F]..tta[L]..aac[N]..cgg[R]..gtt[V]..tgc[C]..ggt[G]..gta[V]..agt[S]..gca[A]…

ribosomal_slippage

note="pp1ab; translated by -1 ribosomal frameshift"

と注釈が入っています。リボゾームがスリップして、フレームシフトを起こすようです。この現象は2005年にはコロナウイルスで報告があります。1

ORF1 a/bは大きなペプチドで、複数のたんぱく質(mature protein)をコードしています。その機能は、良く判りません。

ORF1 a/bに続く遺伝子は「S」 スパイクタンパク質をコードする遺伝子です。このタンパク質がACE2と結合してヒト細胞に侵入するのに大きな役割を担っていると報告されています。

gene 21563..25384

gene="S"

アミノ酸配列です

/translation="MFVFLVLLPLVSSQCVNLTTRTQLPPAYTNSFTRGVYYPDKVFR SSVLHSTQDLFLPFFSNVTWFHAIHVSGTNGTKRFDNPVLPFNDGVYFASTEKSNIIR GWIFGTTLDSKTQSLLIVNNATNVVIKVCEFQFCNDPFLGVYYHKNNKSWMESEFRVY SSANNCTFEYVSQPFLMDLEGKQGNFKNLREFVFKNIDGYFKIYSKHTPINLVRDLPQ GFSALEPLVDLPIGINITRFQTLLALHRSYLTPGDSSSGWTAGAAAYYVGYLQPRTFL LKYNENGTITDAVDCALDPLSETKCTLKSFTVEKGIYQTSNFRVQPTESIVRFPNITN LCPFGEVFNATRFASVYAWNRKRISNCVADYSVLYNSASFSTFKCYGVSPTKLNDLCF TNVYADSFVIRGDEVRQIAPGQTGKIADYNYKLPDDFTGCVIAWNSNNLDSKVGGNYN YLYRLFRKSNLKPFERDISTEIYQAGSTPCNGVEGFNCYFPLQSYGFQPTNGVGYQPY RVVVLSFELLHAPATVCGPKKSTNLVKNKCVNFNFNGLTGTGVLTESNKKFLPFQQFG RDIADTTDAVRDPQTLEILDITPCSFGGVSVITPGTNTSNQVAVLYQDVNCTEVPVAI HADQLTPTWRVYSTGSNVFQTRAGCLIGAEHVNNSYECDIPIGAGICASYQTQTNSPR RARSVASQSIIAYTMSLGAENSVAYSNNSIAIPTNFTISVTTEILPVSMTKTSVDCTM YICGDSTECSNLLLQYGSFCTQLNRALTGIAVEQDKNTQEVFAQVKQIYKTPPIKDFG GFNFSQILPDPSKPSKRSFIEDLLFNKVTLADAGFIKQYGDCLGDIAARDLICAQKFN GLTVLPPLLTDEMIAQYTSALLAGTITSGWTFGAGAALQIPFAMQMAYRFNGIGVTQN VLYENQKLIANQFNSAIGKIQDSLSSTASALGKLQDVVNQNAQALNTLVKQLSSNFGA ISSVLNDILSRLDKVEAEVQIDRLITGRLQSLQTYVTQQLIRAAEIRASANLAATKMS ECVLGQSKRVDFCGKGYHLMSFPQSAPHGVVFLHVTYVPAQEKNFTTAPAICHDGKAH FPREGVFVSNGTHWFVTQRNFYEPQIITTDNTFVSGNCDVVIGIVNNTVYDPLQPELD SFKEELDKYFKNHTSPDVDLGDISGINASVVNIQKEIDRLNEVAKNLNESLIDLQELG KYEQYIKWPWYIWLGFIAGLIAIVMVTIMLCCMTSCCSCLKGCCSCGSCCKFDEDDSE PVLKGVKLHYT

Sに続く配列がORF3a

gene 25393..26220

/gene="ORF3a"

ORF3aに続くのは、SARS-Cov-2 ウイルスの構造タンパク質であるエンベロープをコードする遺伝子です。

gene 26245..26472

/gene="E"

アミノ酸配列です

/translation="MYSFVSEETGTLIVNSVLLFLAFVVFLLVTLAILTALRLCAYCC NIVNVSLVKPSFYVYSRVKNLNSSRVPDLLV"

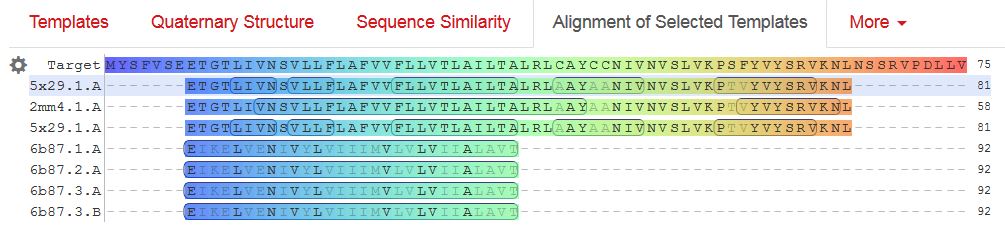

Envelopeにはあまり、面白そうなリガンドが含まれる相同性のある構造が報告されていないようなので、アライメントした結果をここに貼っておきます。

gene E に続くのは、gene Mです。

gene 26523..27191

/gene="M"

アミノ酸配列です。

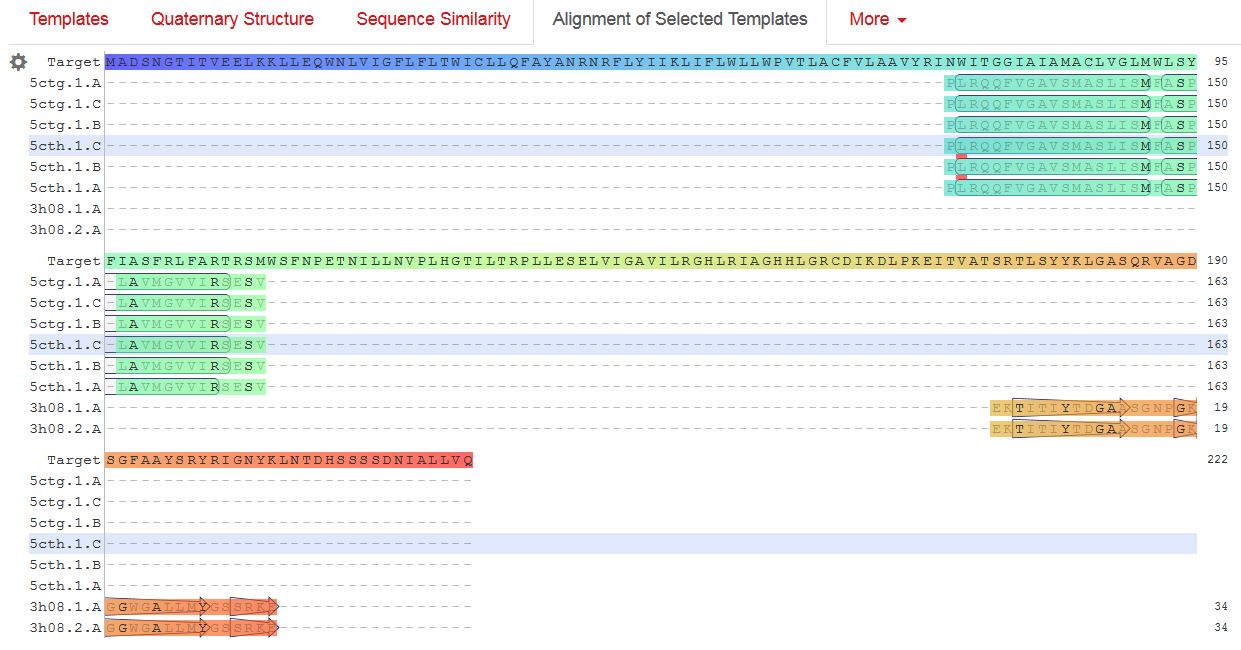

/translation="MADSNGTITVEELKKLLEQWNLVIGFLFLTWICLLQFAYANRNR FLYIIKLIFLWLLWPVTLACFVLAAVYRINWITGGIAIAMACLVGLMWLSYFIASFRL FARTRSMWSFNPETNILLNVPLHGTILTRPLLESELVIGAVILRGHLRIAGHHLGRCD IKDLPKEITVATSRTLSYYKLGASQRVAGDSGFAAYSRYRIGNYKLNTDHSSSSDNIA LLVQ"

M-proteinで相同性検索すると、リガンドが含まれる構造が8種類あるのですが、既知の構造とSARS-CoV-2のM-proteinで相同性がある範囲が狭いのと、テンプレートのリガンドの位置が気に入らないので、これ以上深追いしないという事で、アライメントを公開します。

gene Mに続くのは、ORF6, 7a, 7b, 8です。

gene 27202..27387

/gene="ORF6"

gene 27394..27759

/gene="ORF7a"

gene 27756..27887

/gene="ORF7b"

gene 27894..28259

/gene="ORF8"

ORF 8につづくのは、gene Nで、これはnucleocapsid phosphoproteinをコードします。

gene 28274..29533

/gene="N"

アミノ酸残基です

/translation="MSDNGPQNQRNAPRITFGGPSDSTGSNQNGERSGARSKQRRPQG LPNNTASWFTALTQHGKEDLKFPRGQGVPINTNSSPDDQIGYYRRATRRIRGGDGKMK DLSPRWYFYYLGTGPEAGLPYGANKDGIIWVATEGALNTPKDHIGTRNPANNAAIVLQ LPQGTTLPKGFYAEGSRGGSQASSRSSSRSRNSSRNSTPGSSRGTSPARMAGNGGDAA LALLLLDRLNQLESKMSGKGQQQQGQTVTKKSAAEASKKPRQKRTATKAYNVTQAFGR RGPEQTQGNFGDQELIRQGTDYKHWPQIAQFAPSASAFFGMSRIGMEVTPSGTWLTYT GAIKLDDKDPNFKDQVILLNKHIDAYKTFPPTEPKKDKKKKADETQALPQRQKKQQTV TLLPAADLDDFSKQLQQSMSSADSTQA"

gene Nに続いて出てくる読み枠が最も3′-末のORF10です。

gene 29558..29674

/gene="ORF10"

これに引き続いて3′-UTR配列が来ます。

ORIGIN

1 attaaaggtt tataccttcc caggtaacaa accaaccaac tttcgatctc ttgtagatct

61 gttctctaaa cgaactttaa aatctgtgtg gctgtcactc ggctgcatgc ttagtgcact

121 cacgcagtat aattaataac taattactgt cgttgacagg acacgagtaa ctcgtctatc

181 ttctgcaggc tgcttacggt ttcgtccgtg ttgcagccga tcatcagcac atctaggttt

241 cgtccgggtg tgaccgaaag gtaagatgga gagccttgtc cctggtttca acgagaaaac

301 acacgtccaa ctcagtttgc ctgttttaca ggttcgcgac gtgctcgtac gtggctttgg

361 agactccgtg gaggaggtct tatcagaggc acgtcaacat cttaaagatg gcacttgtgg

421 cttagtagaa gttgaaaaag gcgttttgcc tcaacttgaa cagccctatg tgttcatcaa

481 acgttcggat gctcgaactg cacctcatgg tcatgttatg gttgagctgg tagcagaact

541 cgaaggcatt cagtacggtc gtagtggtga gacacttggt gtccttgtcc ctcatgtggg

601 cgaaatacca gtggcttacc gcaaggttct tcttcgtaag aacggtaata aaggagctgg

661 tggccatagt tacggcgccg atctaaagtc atttgactta ggcgacgagc ttggcactga

721 tccttatgaa gattttcaag aaaactggaa cactaaacat agcagtggtg ttacccgtga

781 actcatgcgt gagcttaacg gaggggcata cactcgctat gtcgataaca acttctgtgg

841 ccctgatggc taccctcttg agtgcattaa agaccttcta gcacgtgctg gtaaagcttc

901 atgcactttg tccgaacaac tggactttat tgacactaag aggggtgtat actgctgccg

961 tgaacatgag catgaaattg cttggtacac ggaacgttct gaaaagagct atgaattgca

1021 gacacctttt gaaattaaat tggcaaagaa atttgacacc ttcaatgggg aatgtccaaa

1081 ttttgtattt cccttaaatt ccataatcaa gactattcaa ccaagggttg aaaagaaaaa

1141 gcttgatggc tttatgggta gaattcgatc tgtctatcca gttgcgtcac caaatgaatg

1201 caaccaaatg tgcctttcaa ctctcatgaa gtgtgatcat tgtggtgaaa cttcatggca

1261 gacgggcgat tttgttaaag ccacttgcga attttgtggc actgagaatt tgactaaaga

1321 aggtgccact acttgtggtt acttacccca aaatgctgtt gttaaaattt attgtccagc

1381 atgtcacaat tcagaagtag gacctgagca tagtcttgcc gaataccata atgaatctgg

1441 cttgaaaacc attcttcgta agggtggtcg cactattgcc tttggaggct gtgtgttctc

1501 ttatgttggt tgccataaca agtgtgccta ttgggttcca cgtgctagcg ctaacatagg

1561 ttgtaaccat acaggtgttg ttggagaagg ttccgaaggt cttaatgaca accttcttga

1621 aatactccaa aaagagaaag tcaacatcaa tattgttggt gactttaaac ttaatgaaga

1681 gatcgccatt attttggcat ctttttctgc ttccacaagt gcttttgtgg aaactgtgaa

1741 aggtttggat tataaagcat tcaaacaaat tgttgaatcc tgtggtaatt ttaaagttac

1801 aaaaggaaaa gctaaaaaag gtgcctggaa tattggtgaa cagaaatcaa tactgagtcc

1861 tctttatgca tttgcatcag aggctgctcg tgttgtacga tcaattttct cccgcactct

1921 tgaaactgct caaaattctg tgcgtgtttt acagaaggcc gctataacaa tactagatgg

1981 aatttcacag tattcactga gactcattga tgctatgatg ttcacatctg atttggctac

2041 taacaatcta gttgtaatgg cctacattac aggtggtgtt gttcagttga cttcgcagtg

2101 gctaactaac atctttggca ctgtttatga aaaactcaaa cccgtccttg attggcttga

2161 agagaagttt aaggaaggtg tagagtttct tagagacggt tgggaaattg ttaaatttat

2221 ctcaacctgt gcttgtgaaa ttgtcggtgg acaaattgtc acctgtgcaa aggaaattaa

2281 ggagagtgtt cagacattct ttaagcttgt aaataaattt ttggctttgt gtgctgactc

2341 tatcattatt ggtggagcta aacttaaagc cttgaattta ggtgaaacat ttgtcacgca

2401 ctcaaaggga ttgtacagaa agtgtgttaa atccagagaa gaaactggcc tactcatgcc

2461 tctaaaagcc ccaaaagaaa ttatcttctt agagggagaa acacttccca cagaagtgtt

2521 aacagaggaa gttgtcttga aaactggtga tttacaacca ttagaacaac ctactagtga

2581 agctgttgaa gctccattgg ttggtacacc agtttgtatt aacgggctta tgttgctcga

2641 aatcaaagac acagaaaagt actgtgccct tgcacctaat atgatggtaa caaacaatac

2701 cttcacactc aaaggcggtg caccaacaaa ggttactttt ggtgatgaca ctgtgataga

2761 agtgcaaggt tacaagagtg tgaatatcac ttttgaactt gatgaaagga ttgataaagt

2821 acttaatgag aagtgctctg cctatacagt tgaactcggt acagaagtaa atgagttcgc

2881 ctgtgttgtg gcagatgctg tcataaaaac tttgcaacca gtatctgaat tacttacacc

2941 actgggcatt gatttagatg agtggagtat ggctacatac tacttatttg atgagtctgg

3001 tgagtttaaa ttggcttcac atatgtattg ttctttctac cctccagatg aggatgaaga

3061 agaaggtgat tgtgaagaag aagagtttga gccatcaact caatatgagt atggtactga

3121 agatgattac caaggtaaac ctttggaatt tggtgccact tctgctgctc ttcaacctga

3181 agaagagcaa gaagaagatt ggttagatga tgatagtcaa caaactgttg gtcaacaaga

3241 cggcagtgag gacaatcaga caactactat tcaaacaatt gttgaggttc aacctcaatt

3301 agagatggaa cttacaccag ttgttcagac tattgaagtg aatagtttta gtggttattt

3361 aaaacttact gacaatgtat acattaaaaa tgcagacatt gtggaagaag ctaaaaaggt

3421 aaaaccaaca gtggttgtta atgcagccaa tgtttacctt aaacatggag gaggtgttgc

3481 aggagcctta aataaggcta ctaacaatgc catgcaagtt gaatctgatg attacatagc

3541 tactaatgga ccacttaaag tgggtggtag ttgtgtttta agcggacaca atcttgctaa

3601 acactgtctt catgttgtcg gcccaaatgt taacaaaggt gaagacattc aacttcttaa

3661 gagtgcttat gaaaatttta atcagcacga agttctactt gcaccattat tatcagctgg

3721 tatttttggt gctgacccta tacattcttt aagagtttgt gtagatactg ttcgcacaaa

3781 tgtctactta gctgtctttg ataaaaatct ctatgacaaa cttgtttcaa gctttttgga

3841 aatgaagagt gaaaagcaag ttgaacaaaa gatcgctgag attcctaaag aggaagttaa

3901 gccatttata actgaaagta aaccttcagt tgaacagaga aaacaagatg ataagaaaat

3961 caaagcttgt gttgaagaag ttacaacaac tctggaagaa actaagttcc tcacagaaaa

4021 cttgttactt tatattgaca ttaatggcaa tcttcatcca gattctgcca ctcttgttag

4081 tgacattgac atcactttct taaagaaaga tgctccatat atagtgggtg atgttgttca

4141 agagggtgtt ttaactgctg tggttatacc tactaaaaag gctggtggca ctactgaaat

4201 gctagcgaaa gctttgagaa aagtgccaac agacaattat ataaccactt acccgggtca

4261 gggtttaaat ggttacactg tagaggaggc aaagacagtg cttaaaaagt gtaaaagtgc

4321 cttttacatt ctaccatcta ttatctctaa tgagaagcaa gaaattcttg gaactgtttc

4381 ttggaatttg cgagaaatgc ttgcacatgc agaagaaaca cgcaaattaa tgcctgtctg

4441 tgtggaaact aaagccatag tttcaactat acagcgtaaa tataagggta ttaaaataca

4501 agagggtgtg gttgattatg gtgctagatt ttacttttac accagtaaaa caactgtagc

4561 gtcacttatc aacacactta acgatctaaa tgaaactctt gttacaatgc cacttggcta

4621 tgtaacacat ggcttaaatt tggaagaagc tgctcggtat atgagatctc tcaaagtgcc

4681 agctacagtt tctgtttctt cacctgatgc tgttacagcg tataatggtt atcttacttc

4741 ttcttctaaa acacctgaag aacattttat tgaaaccatc tcacttgctg gttcctataa

4801 agattggtcc tattctggac aatctacaca actaggtata gaatttctta agagaggtga

4861 taaaagtgta tattacacta gtaatcctac cacattccac ctagatggtg aagttatcac

4921 ctttgacaat cttaagacac ttctttcttt gagagaagtg aggactatta aggtgtttac

4981 aacagtagac aacattaacc tccacacgca agttgtggac atgtcaatga catatggaca

5041 acagtttggt ccaacttatt tggatggagc tgatgttact aaaataaaac ctcataattc

5101 acatgaaggt aaaacatttt atgttttacc taatgatgac actctacgtg ttgaggcttt

5161 tgagtactac cacacaactg atcctagttt tctgggtagg tacatgtcag cattaaatca

5221 cactaaaaag tggaaatacc cacaagttaa tggtttaact tctattaaat gggcagataa

5281 caactgttat cttgccactg cattgttaac actccaacaa atagagttga agtttaatcc

5341 acctgctcta caagatgctt attacagagc aagggctggt gaagctgcta acttttgtgc

5401 acttatctta gcctactgta ataagacagt aggtgagtta ggtgatgtta gagaaacaat

5461 gagttacttg tttcaacatg ccaatttaga ttcttgcaaa agagtcttga acgtggtgtg

5521 taaaacttgt ggacaacagc agacaaccct taagggtgta gaagctgtta tgtacatggg

5581 cacactttct tatgaacaat ttaagaaagg tgttcagata ccttgtacgt gtggtaaaca

5641 agctacaaaa tatctagtac aacaggagtc accttttgtt atgatgtcag caccacctgc

5701 tcagtatgaa cttaagcatg gtacatttac ttgtgctagt gagtacactg gtaattacca

5761 gtgtggtcac tataaacata taacttctaa agaaactttg tattgcatag acggtgcttt

5821 acttacaaag tcctcagaat acaaaggtcc tattacggat gttttctaca aagaaaacag

5881 ttacacaaca accataaaac cagttactta taaattggat ggtgttgttt gtacagaaat

5941 tgaccctaag ttggacaatt attataagaa agacaattct tatttcacag agcaaccaat

6001 tgatcttgta ccaaaccaac catatccaaa cgcaagcttc gataatttta agtttgtatg

6061 tgataatatc aaatttgctg atgatttaaa ccagttaact ggttataaga aacctgcttc

6121 aagagagctt aaagttacat ttttccctga cttaaatggt gatgtggtgg ctattgatta

6181 taaacactac acaccctctt ttaagaaagg agctaaattg ttacataaac ctattgtttg

6241 gcatgttaac aatgcaacta ataaagccac gtataaacca aatacctggt gtatacgttg

6301 tctttggagc acaaaaccag ttgaaacatc aaattcgttt gatgtactga agtcagagga

6361 cgcgcaggga atggataatc ttgcctgcga agatctaaaa ccagtctctg aagaagtagt

6421 ggaaaatcct accatacaga aagacgttct tgagtgtaat gtgaaaacta ccgaagttgt

6481 aggagacatt atacttaaac cagcaaataa tagtttaaaa attacagaag aggttggcca

6541 cacagatcta atggctgctt atgtagacaa ttctagtctt actattaaga aacctaatga

6601 attatctaga gtattaggtt tgaaaaccct tgctactcat ggtttagctg ctgttaatag

6661 tgtcccttgg gatactatag ctaattatgc taagcctttt cttaacaaag ttgttagtac

6721 aactactaac atagttacac ggtgtttaaa ccgtgtttgt actaattata tgccttattt

6781 ctttacttta ttgctacaat tgtgtacttt tactagaagt acaaattcta gaattaaagc

6841 atctatgccg actactatag caaagaatac tgttaagagt gtcggtaaat tttgtctaga

6901 ggcttcattt aattatttga agtcacctaa tttttctaaa ctgataaata ttataatttg

6961 gtttttacta ttaagtgttt gcctaggttc tttaatctac tcaaccgctg ctttaggtgt

7021 tttaatgtct aatttaggca tgccttctta ctgtactggt tacagagaag gctatttgaa

7081 ctctactaat gtcactattg caacctactg tactggttct ataccttgta gtgtttgtct

7141 tagtggttta gattctttag acacctatcc ttctttagaa actatacaaa ttaccatttc

7201 atcttttaaa tgggatttaa ctgcttttgg cttagttgca gagtggtttt tggcatatat

7261 tcttttcact aggtttttct atgtacttgg attggctgca atcatgcaat tgtttttcag

7321 ctattttgca gtacatttta ttagtaattc ttggcttatg tggttaataa ttaatcttgt

7381 acaaatggcc ccgatttcag ctatggttag aatgtacatc ttctttgcat cattttatta

7441 tgtatggaaa agttatgtgc atgttgtaga cggttgtaat tcatcaactt gtatgatgtg

7501 ttacaaacgt aatagagcaa caagagtcga atgtacaact attgttaatg gtgttagaag

7561 gtccttttat gtctatgcta atggaggtaa aggcttttgc aaactacaca attggaattg

7621 tgttaattgt gatacattct gtgctggtag tacatttatt agtgatgaag ttgcgagaga

7681 cttgtcacta cagtttaaaa gaccaataaa tcctactgac cagtcttctt acatcgttga

7741 tagtgttaca gtgaagaatg gttccatcca tctttacttt gataaagctg gtcaaaagac

7801 ttatgaaaga cattctctct ctcattttgt taacttagac aacctgagag ctaataacac

7861 taaaggttca ttgcctatta atgttatagt ttttgatggt aaatcaaaat gtgaagaatc

7921 atctgcaaaa tcagcgtctg tttactacag tcagcttatg tgtcaaccta tactgttact

7981 agatcaggca ttagtgtctg atgttggtga tagtgcggaa gttgcagtta aaatgtttga

8041 tgcttacgtt aatacgtttt catcaacttt taacgtacca atggaaaaac tcaaaacact

8101 agttgcaact gcagaagctg aacttgcaaa gaatgtgtcc ttagacaatg tcttatctac

8161 ttttatttca gcagctcggc aagggtttgt tgattcagat gtagaaacta aagatgttgt

8221 tgaatgtctt aaattgtcac atcaatctga catagaagtt actggcgata gttgtaataa

8281 ctatatgctc acctataaca aagttgaaaa catgacaccc cgtgaccttg gtgcttgtat

8341 tgactgtagt gcgcgtcata ttaatgcgca ggtagcaaaa agtcacaaca ttgctttgat

8401 atggaacgtt aaagatttca tgtcattgtc tgaacaacta cgaaaacaaa tacgtagtgc

8461 tgctaaaaag aataacttac cttttaagtt gacatgtgca actactagac aagttgttaa

8521 tgttgtaaca acaaagatag cacttaaggg tggtaaaatt gttaataatt ggttgaagca

8581 gttaattaaa gttacacttg tgttcctttt tgttgctgct attttctatt taataacacc

8641 tgttcatgtc atgtctaaac atactgactt ttcaagtgaa atcataggat acaaggctat

8701 tgatggtggt gtcactcgtg acatagcatc tacagatact tgttttgcta acaaacatgc

8761 tgattttgac acatggttta gccagcgtgg tggtagttat actaatgaca aagcttgccc

8821 attgattgct gcagtcataa caagagaagt gggttttgtc gtgcctggtt tgcctggcac

8881 gatattacgc acaactaatg gtgacttttt gcatttctta cctagagttt ttagtgcagt

8941 tggtaacatc tgttacacac catcaaaact tatagagtac actgactttg caacatcagc

9001 ttgtgttttg gctgctgaat gtacaatttt taaagatgct tctggtaagc cagtaccata

9061 ttgttatgat accaatgtac tagaaggttc tgttgcttat gaaagtttac gccctgacac

9121 acgttatgtg ctcatggatg gctctattat tcaatttcct aacacctacc ttgaaggttc

9181 tgttagagtg gtaacaactt ttgattctga gtactgtagg cacggcactt gtgaaagatc

9241 agaagctggt gtttgtgtat ctactagtgg tagatgggta cttaacaatg attattacag

9301 atctttacca ggagttttct gtggtgtaga tgctgtaaat ttacttacta atatgtttac

9361 accactaatt caacctattg gtgctttgga catatcagca tctatagtag ctggtggtat

9421 tgtagctatc gtagtaacat gccttgccta ctattttatg aggtttagaa gagcttttgg

9481 tgaatacagt catgtagttg cctttaatac tttactattc cttatgtcat tcactgtact

9541 ctgtttaaca ccagtttact cattcttacc tggtgtttat tctgttattt acttgtactt

9601 gacattttat cttactaatg atgtttcttt tttagcacat attcagtgga tggttatgtt

9661 cacaccttta gtacctttct ggataacaat tgcttatatc atttgtattt ccacaaagca

9721 tttctattgg ttctttagta attacctaaa gagacgtgta gtctttaatg gtgtttcctt

9781 tagtactttt gaagaagctg cgctgtgcac ctttttgtta aataaagaaa tgtatctaaa

9841 gttgcgtagt gatgtgctat tacctcttac gcaatataat agatacttag ctctttataa

9901 taagtacaag tattttagtg gagcaatgga tacaactagc tacagagaag ctgcttgttg

9961 tcatctcgca aaggctctca atgacttcag taactcaggt tctgatgttc tttaccaacc

10021 accacaaacc tctatcacct cagctgtttt gcagagtggt tttagaaaaa tggcattccc

10081 atctggtaaa gttgagggtt gtatggtaca agtaacttgt ggtacaacta cacttaacgg

10141 tctttggctt gatgacgtag tttactgtcc aagacatgtg atctgcacct ctgaagacat

10201 gcttaaccct aattatgaag atttactcat tcgtaagtct aatcataatt tcttggtaca

10261 ggctggtaat gttcaactca gggttattgg acattctatg caaaattgtg tacttaagct

10321 taaggttgat acagccaatc ctaagacacc taagtataag tttgttcgca ttcaaccagg

10381 acagactttt tcagtgttag cttgttacaa tggttcacca tctggtgttt accaatgtgc

10441 tatgaggccc aatttcacta ttaagggttc attccttaat ggttcatgtg gtagtgttgg

10501 ttttaacata gattatgact gtgtctcttt ttgttacatg caccatatgg aattaccaac

10561 tggagttcat gctggcacag acttagaagg taacttttat ggaccttttg ttgacaggca

10621 aacagcacaa gcagctggta cggacacaac tattacagtt aatgttttag cttggttgta

10681 cgctgctgtt ataaatggag acaggtggtt tctcaatcga tttaccacaa ctcttaatga

10741 ctttaacctt gtggctatga agtacaatta tgaacctcta acacaagacc atgttgacat

10801 actaggacct ctttctgctc aaactggaat tgccgtttta gatatgtgtg cttcattaaa

10861 agaattactg caaaatggta tgaatggacg taccatattg ggtagtgctt tattagaaga

10921 tgaatttaca ccttttgatg ttgttagaca atgctcaggt gttactttcc aaagtgcagt

10981 gaaaagaaca atcaagggta cacaccactg gttgttactc acaattttga cttcactttt

11041 agttttagtc cagagtactc aatggtcttt gttctttttt ttgtatgaaa atgccttttt

11101 accttttgct atgggtatta ttgctatgtc tgcttttgca atgatgtttg tcaaacataa

11161 gcatgcattt ctctgtttgt ttttgttacc ttctcttgcc actgtagctt attttaatat

11221 ggtctatatg cctgctagtt gggtgatgcg tattatgaca tggttggata tggttgatac

11281 tagtttgtct ggttttaagc taaaagactg tgttatgtat gcatcagctg tagtgttact

11341 aatccttatg acagcaagaa ctgtgtatga tgatggtgct aggagagtgt ggacacttat

11401 gaatgtcttg acactcgttt ataaagttta ttatggtaat gctttagatc aagccatttc

11461 catgtgggct cttataatct ctgttacttc taactactca ggtgtagtta caactgtcat

11521 gtttttggcc agaggtattg tttttatgtg tgttgagtat tgccctattt tcttcataac

11581 tggtaataca cttcagtgta taatgctagt ttattgtttc ttaggctatt tttgtacttg

11641 ttactttggc ctcttttgtt tactcaaccg ctactttaga ctgactcttg gtgtttatga

11701 ttacttagtt tctacacagg agtttagata tatgaattca cagggactac tcccacccaa

11761 gaatagcata gatgccttca aactcaacat taaattgttg ggtgttggtg gcaaaccttg

11821 tatcaaagta gccactgtac agtctaaaat gtcagatgta aagtgcacat cagtagtctt

11881 actctcagtt ttgcaacaac tcagagtaga atcatcatct aaattgtggg ctcaatgtgt

11941 ccagttacac aatgacattc tcttagctaa agatactact gaagcctttg aaaaaatggt

12001 ttcactactt tctgttttgc tttccatgca gggtgctgta gacataaaca agctttgtga

12061 agaaatgctg gacaacaggg caaccttaca agctatagcc tcagagttta gttcccttcc

12121 atcatatgca gcttttgcta ctgctcaaga agcttatgag caggctgttg ctaatggtga

12181 ttctgaagtt gttcttaaaa agttgaagaa gtctttgaat gtggctaaat ctgaatttga

12241 ccgtgatgca gccatgcaac gtaagttgga aaagatggct gatcaagcta tgacccaaat

12301 gtataaacag gctagatctg aggacaagag ggcaaaagtt actagtgcta tgcagacaat

12361 gcttttcact atgcttagaa agttggataa tgatgcactc aacaacatta tcaacaatgc

12421 aagagatggt tgtgttccct tgaacataat acctcttaca acagcagcca aactaatggt

12481 tgtcatacca gactataaca catataaaaa tacgtgtgat ggtacaacat ttacttatgc

12541 atcagcattg tgggaaatcc aacaggttgt agatgcagat agtaaaattg ttcaacttag

12601 tgaaattagt atggacaatt cacctaattt agcatggcct cttattgtaa cagctttaag

12661 ggccaattct gctgtcaaat tacagaataa tgagcttagt cctgttgcac tacgacagat

12721 gtcttgtgct gccggtacta cacaaactgc ttgcactgat gacaatgcgt tagcttacta

12781 caacacaaca aagggaggta ggtttgtact tgcactgtta tccgatttac aggatttgaa

12841 atgggctaga ttccctaaga gtgatggaac tggtactatc tatacagaac tggaaccacc

12901 ttgtaggttt gttacagaca cacctaaagg tcctaaagtg aagtatttat actttattaa

12961 aggattaaac aacctaaata gaggtatggt acttggtagt ttagctgcca cagtacgtct

13021 acaagctggt aatgcaacag aagtgcctgc caattcaact gtattatctt tctgtgcttt

13081 tgctgtagat gctgctaaag cttacaaaga ttatctagct agtgggggac aaccaatcac

13141 taattgtgtt aagatgttgt gtacacacac tggtactggt caggcaataa cagttacacc

13201 ggaagccaat atggatcaag aatcctttgg tggtgcatcg tgttgtctgt actgccgttg

13261 ccacatagat catccaaatc ctaaaggatt ttgtgactta aaaggtaagt atgtacaaat

13321 acctacaact tgtgctaatg accctgtggg ttttacactt aaaaacacag tctgtaccgt

13381 ctgcggtatg tggaaaggtt atggctgtag ttgtgatcaa ctccgcgaac ccatgcttca

13441 gtcagctgat gcacaatcgt ttttaaacgg gtttgcggtg taagtgcagc ccgtcttaca

13501 ccgtgcggca caggcactag tactgatgtc gtatacaggg cttttgacat ctacaatgat

13561 aaagtagctg gttttgctaa attcctaaaa actaattgtt gtcgcttcca agaaaaggac

13621 gaagatgaca atttaattga ttcttacttt gtagttaaga gacacacttt ctctaactac

13681 caacatgaag aaacaattta taatttactt aaggattgtc cagctgttgc taaacatgac

13741 ttctttaagt ttagaataga cggtgacatg gtaccacata tatcacgtca acgtcttact

13801 aaatacacaa tggcagacct cgtctatgct ttaaggcatt ttgatgaagg taattgtgac

13861 acattaaaag aaatacttgt cacatacaat tgttgtgatg atgattattt caataaaaag

13921 gactggtatg attttgtaga aaacccagat atattacgcg tatacgccaa cttaggtgaa

13981 cgtgtacgcc aagctttgtt aaaaacagta caattctgtg atgccatgcg aaatgctggt

14041 attgttggtg tactgacatt agataatcaa gatctcaatg gtaactggta tgatttcggt

14101 gatttcatac aaaccacgcc aggtagtgga gttcctgttg tagattctta ttattcattg

14161 ttaatgccta tattaacctt gaccagggct ttaactgcag agtcacatgt tgacactgac

14221 ttaacaaagc cttacattaa gtgggatttg ttaaaatatg acttcacgga agagaggtta

14281 aaactctttg accgttattt taaatattgg gatcagacat accacccaaa ttgtgttaac

14341 tgtttggatg acagatgcat tctgcattgt gcaaacttta atgttttatt ctctacagtg

14401 ttcccaccta caagttttgg accactagtg agaaaaatat ttgttgatgg tgttccattt

14461 gtagtttcaa ctggatacca cttcagagag ctaggtgttg tacataatca ggatgtaaac

14521 ttacatagct ctagacttag ttttaaggaa ttacttgtgt atgctgctga ccctgctatg

14581 cacgctgctt ctggtaatct attactagat aaacgcacta cgtgcttttc agtagctgca

14641 cttactaaca atgttgcttt tcaaactgtc aaacccggta attttaacaa agacttctat

14701 gactttgctg tgtctaaggg tttctttaag gaaggaagtt ctgttgaatt aaaacacttc

14761 ttctttgctc aggatggtaa tgctgctatc agcgattatg actactatcg ttataatcta

14821 ccaacaatgt gtgatatcag acaactacta tttgtagttg aagttgttga taagtacttt

14881 gattgttacg atggtggctg tattaatgct aaccaagtca tcgtcaacaa cctagacaaa

14941 tcagctggtt ttccatttaa taaatggggt aaggctagac tttattatga ttcaatgagt

15001 tatgaggatc aagatgcact tttcgcatat acaaaacgta atgtcatccc tactataact

15061 caaatgaatc ttaagtatgc cattagtgca aagaatagag ctcgcaccgt agctggtgtc

15121 tctatctgta gtactatgac caatagacag tttcatcaaa aattattgaa atcaatagcc

15181 gccactagag gagctactgt agtaattgga acaagcaaat tctatggtgg ttggcacaac

15241 atgttaaaaa ctgtttatag tgatgtagaa aaccctcacc ttatgggttg ggattatcct

15301 aaatgtgata gagccatgcc taacatgctt agaattatgg cctcacttgt tcttgctcgc

15361 aaacatacaa cgtgttgtag cttgtcacac cgtttctata gattagctaa tgagtgtgct

15421 caagtattga gtgaaatggt catgtgtggc ggttcactat atgttaaacc aggtggaacc

15481 tcatcaggag atgccacaac tgcttatgct aatagtgttt ttaacatttg tcaagctgtc

15541 acggccaatg ttaatgcact tttatctact gatggtaaca aaattgccga taagtatgtc

15601 cgcaatttac aacacagact ttatgagtgt ctctatagaa atagagatgt tgacacagac

15661 tttgtgaatg agttttacgc atatttgcgt aaacatttct caatgatgat actctctgac

15721 gatgctgttg tgtgtttcaa tagcacttat gcatctcaag gtctagtggc tagcataaag

15781 aactttaagt cagttcttta ttatcaaaac aatgttttta tgtctgaagc aaaatgttgg

15841 actgagactg accttactaa aggacctcat gaattttgct ctcaacatac aatgctagtt

15901 aaacagggtg atgattatgt gtaccttcct tacccagatc catcaagaat cctaggggcc

15961 ggctgttttg tagatgatat cgtaaaaaca gatggtacac ttatgattga acggttcgtg

16021 tctttagcta tagatgctta cccacttact aaacatccta atcaggagta tgctgatgtc

16081 tttcatttgt acttacaata cataagaaag ctacatgatg agttaacagg acacatgtta

16141 gacatgtatt ctgttatgct tactaatgat aacacttcaa ggtattggga acctgagttt

16201 tatgaggcta tgtacacacc gcatacagtc ttacaggctg ttggggcttg tgttctttgc

16261 aattcacaga cttcattaag atgtggtgct tgcatacgta gaccattctt atgttgtaaa

16321 tgctgttacg accatgtcat atcaacatca cataaattag tcttgtctgt taatccgtat

16381 gtttgcaatg ctccaggttg tgatgtcaca gatgtgactc aactttactt aggaggtatg

16441 agctattatt gtaaatcaca taaaccaccc attagttttc cattgtgtgc taatggacaa

16501 gtttttggtt tatataaaaa tacatgtgtt ggtagcgata atgttactga ctttaatgca

16561 attgcaacat gtgactggac aaatgctggt gattacattt tagctaacac ctgtactgaa

16621 agactcaagc tttttgcagc agaaacgctc aaagctactg aggagacatt taaactgtct

16681 tatggtattg ctactgtacg tgaagtgctg tctgacagag aattacatct ttcatgggaa

16741 gttggtaaac ctagaccacc acttaaccga aattatgtct ttactggtta tcgtgtaact

16801 aaaaacagta aagtacaaat aggagagtac acctttgaaa aaggtgacta tggtgatgct

16861 gttgtttacc gaggtacaac aacttacaaa ttaaatgttg gtgattattt tgtgctgaca

16921 tcacatacag taatgccatt aagtgcacct acactagtgc cacaagagca ctatgttaga

16981 attactggct tatacccaac actcaatatc tcagatgagt tttctagcaa tgttgcaaat

17041 tatcaaaagg ttggtatgca aaagtattct acactccagg gaccacctgg tactggtaag

17101 agtcattttg ctattggcct agctctctac tacccttctg ctcgcatagt gtatacagct

17161 tgctctcatg ccgctgttga tgcactatgt gagaaggcat taaaatattt gcctatagat

17221 aaatgtagta gaattatacc tgcacgtgct cgtgtagagt gttttgataa attcaaagtg

17281 aattcaacat tagaacagta tgtcttttgt actgtaaatg cattgcctga gacgacagca

17341 gatatagttg tctttgatga aatttcaatg gccacaaatt atgatttgag tgttgtcaat

17401 gccagattac gtgctaagca ctatgtgtac attggcgacc ctgctcaatt acctgcacca

17461 cgcacattgc taactaaggg cacactagaa ccagaatatt tcaattcagt gtgtagactt

17521 atgaaaacta taggtccaga catgttcctc ggaacttgtc ggcgttgtcc tgctgaaatt

17581 gttgacactg tgagtgcttt ggtttatgat aataagctta aagcacataa agacaaatca

17641 gctcaatgct ttaaaatgtt ttataagggt gttatcacgc atgatgtttc atctgcaatt

17701 aacaggccac aaataggcgt ggtaagagaa ttccttacac gtaaccctgc ttggagaaaa

17761 gctgtcttta tttcacctta taattcacag aatgctgtag cctcaaagat tttgggacta

17821 ccaactcaaa ctgttgattc atcacagggc tcagaatatg actatgtcat attcactcaa

17881 accactgaaa cagctcactc ttgtaatgta aacagattta atgttgctat taccagagca

17941 aaagtaggca tactttgcat aatgtctgat agagaccttt atgacaagtt gcaatttaca

18001 agtcttgaaa ttccacgtag gaatgtggca actttacaag ctgaaaatgt aacaggactc

18061 tttaaagatt gtagtaaggt aatcactggg ttacatccta cacaggcacc tacacacctc

18121 agtgttgaca ctaaattcaa aactgaaggt ttatgtgttg acatacctgg catacctaag

18181 gacatgacct atagaagact catctctatg atgggtttta aaatgaatta tcaagttaat

18241 ggttacccta acatgtttat cacccgcgaa gaagctataa gacatgtacg tgcatggatt

18301 ggcttcgatg tcgaggggtg tcatgctact agagaagctg ttggtaccaa tttaccttta

18361 cagctaggtt tttctacagg tgttaaccta gttgctgtac ctacaggtta tgttgataca

18421 cctaataata cagatttttc cagagttagt gctaaaccac cgcctggaga tcaatttaaa

18481 cacctcatac cacttatgta caaaggactt ccttggaatg tagtgcgtat aaagattgta

18541 caaatgttaa gtgacacact taaaaatctc tctgacagag tcgtatttgt cttatgggca

18601 catggctttg agttgacatc tatgaagtat tttgtgaaaa taggacctga gcgcacctgt

18661 tgtctatgtg atagacgtgc cacatgcttt tccactgctt cagacactta tgcctgttgg

18721 catcattcta ttggatttga ttacgtctat aatccgttta tgattgatgt tcaacaatgg

18781 ggttttacag gtaacctaca aagcaaccat gatctgtatt gtcaagtcca tggtaatgca

18841 catgtagcta gttgtgatgc aatcatgact aggtgtctag ctgtccacga gtgctttgtt

18901 aagcgtgttg actggactat tgaatatcct ataattggtg atgaactgaa gattaatgcg

18961 gcttgtagaa aggttcaaca catggttgtt aaagctgcat tattagcaga caaattccca

19021 gttcttcacg acattggtaa ccctaaagct attaagtgtg tacctcaagc tgatgtagaa

19081 tggaagttct atgatgcaca gccttgtagt gacaaagctt ataaaataga agaattattc

19141 tattcttatg ccacacattc tgacaaattc acagatggtg tatgcctatt ttggaattgc

19201 aatgtcgata gatatcctgc taattccatt gtttgtagat ttgacactag agtgctatct

19261 aaccttaact tgcctggttg tgatggtggc agtttgtatg taaataaaca tgcattccac

19321 acaccagctt ttgataaaag tgcttttgtt aatttaaaac aattaccatt tttctattac

19381 tctgacagtc catgtgagtc tcatggaaaa caagtagtgt cagatataga ttatgtacca

19441 ctaaagtctg ctacgtgtat aacacgttgc aatttaggtg gtgctgtctg tagacatcat

19501 gctaatgagt acagattgta tctcgatgct tataacatga tgatctcagc tggctttagc

19561 ttgtgggttt acaaacaatt tgatacttat aacctctgga acacttttac aagacttcag

19621 agtttagaaa atgtggcttt taatgttgta aataagggac actttgatgg acaacagggt

19681 gaagtaccag tttctatcat taataacact gtttacacaa aagttgatgg tgttgatgta

19741 gaattgtttg aaaataaaac aacattacct gttaatgtag catttgagct ttgggctaag

19801 cgcaacatta aaccagtacc agaggtgaaa atactcaata atttgggtgt ggacattgct

19861 gctaatactg tgatctggga ctacaaaaga gatgctccag cacatatatc tactattggt

19921 gtttgttcta tgactgacat agccaagaaa ccaactgaaa cgatttgtgc accactcact

19981 gtcttttttg atggtagagt tgatggtcaa gtagacttat ttagaaatgc ccgtaatggt

20041 gttcttatta cagaaggtag tgttaaaggt ttacaaccat ctgtaggtcc caaacaagct

20101 agtcttaatg gagtcacatt aattggagaa gccgtaaaaa cacagttcaa ttattataag

20161 aaagttgatg gtgttgtcca acaattacct gaaacttact ttactcagag tagaaattta

20221 caagaattta aacccaggag tcaaatggaa attgatttct tagaattagc tatggatgaa

20281 ttcattgaac ggtataaatt agaaggctat gccttcgaac atatcgttta tggagatttt

20341 agtcatagtc agttaggtgg tttacatcta ctgattggac tagctaaacg ttttaaggaa

20401 tcaccttttg aattagaaga ttttattcct atggacagta cagttaaaaa ctatttcata

20461 acagatgcgc aaacaggttc atctaagtgt gtgtgttctg ttattgattt attacttgat

20521 gattttgttg aaataataaa atcccaagat ttatctgtag tttctaaggt tgtcaaagtg

20581 actattgact atacagaaat ttcatttatg ctttggtgta aagatggcca tgtagaaaca

20641 ttttacccaa aattacaatc tagtcaagcg tggcaaccgg gtgttgctat gcctaatctt

20701 tacaaaatgc aaagaatgct attagaaaag tgtgaccttc aaaattatgg tgatagtgca

20761 acattaccta aaggcataat gatgaatgtc gcaaaatata ctcaactgtg tcaatattta

20821 aacacattaa cattagctgt accctataat atgagagtta tacattttgg tgctggttct

20881 gataaaggag ttgcaccagg tacagctgtt ttaagacagt ggttgcctac gggtacgctg

20941 cttgtcgatt cagatcttaa tgactttgtc tctgatgcag attcaacttt gattggtgat

21001 tgtgcaactg tacatacagc taataaatgg gatctcatta ttagtgatat gtacgaccct

21061 aagactaaaa atgttacaaa agaaaatgac tctaaagagg gttttttcac ttacatttgt

21121 gggtttatac aacaaaagct agctcttgga ggttccgtgg ctataaagat aacagaacat

21181 tcttggaatg ctgatcttta taagctcatg ggacacttcg catggtggac agcctttgtt

21241 actaatgtga atgcgtcatc atctgaagca tttttaattg gatgtaatta tcttggcaaa

21301 ccacgcgaac aaatagatgg ttatgtcatg catgcaaatt acatattttg gaggaataca

21361 aatccaattc agttgtcttc ctattcttta tttgacatga gtaaatttcc ccttaaatta

21421 aggggtactg ctgttatgtc tttaaaagaa ggtcaaatca atgatatgat tttatctctt

21481 cttagtaaag gtagacttat aattagagaa aacaacagag ttgttatttc tagtgatgtt

21541 cttgttaaca actaaacgaa caatgtttgt ttttcttgtt ttattgccac tagtctctag

21601 tcagtgtgtt aatcttacaa ccagaactca attaccccct gcatacacta attctttcac

21661 acgtggtgtt tattaccctg acaaagtttt cagatcctca gttttacatt caactcagga

21721 cttgttctta cctttctttt ccaatgttac ttggttccat gctatacatg tctctgggac

21781 caatggtact aagaggtttg ataaccctgt cctaccattt aatgatggtg tttattttgc

21841 ttccactgag aagtctaaca taataagagg ctggattttt ggtactactt tagattcgaa

21901 gacccagtcc ctacttattg ttaataacgc tactaatgtt gttattaaag tctgtgaatt

21961 tcaattttgt aatgatccat ttttgggtgt ttattaccac aaaaacaaca aaagttggat

22021 ggaaagtgag ttcagagttt attctagtgc gaataattgc acttttgaat atgtctctca

22081 gccttttctt atggaccttg aaggaaaaca gggtaatttc aaaaatctta gggaatttgt

22141 gtttaagaat attgatggtt attttaaaat atattctaag cacacgccta ttaatttagt

22201 gcgtgatctc cctcagggtt tttcggcttt agaaccattg gtagatttgc caataggtat

22261 taacatcact aggtttcaaa ctttacttgc tttacataga agttatttga ctcctggtga

22321 ttcttcttca ggttggacag ctggtgctgc agcttattat gtgggttatc ttcaacctag

22381 gacttttcta ttaaaatata atgaaaatgg aaccattaca gatgctgtag actgtgcact

22441 tgaccctctc tcagaaacaa agtgtacgtt gaaatccttc actgtagaaa aaggaatcta

22501 tcaaacttct aactttagag tccaaccaac agaatctatt gttagatttc ctaatattac

22561 aaacttgtgc ccttttggtg aagtttttaa cgccaccaga tttgcatctg tttatgcttg

22621 gaacaggaag agaatcagca actgtgttgc tgattattct gtcctatata attccgcatc

22681 attttccact tttaagtgtt atggagtgtc tcctactaaa ttaaatgatc tctgctttac

22741 taatgtctat gcagattcat ttgtaattag aggtgatgaa gtcagacaaa tcgctccagg

22801 gcaaactgga aagattgctg attataatta taaattacca gatgatttta caggctgcgt

22861 tatagcttgg aattctaaca atcttgattc taaggttggt ggtaattata attacctgta

22921 tagattgttt aggaagtcta atctcaaacc ttttgagaga gatatttcaa ctgaaatcta

22981 tcaggccggt agcacacctt gtaatggtgt tgaaggtttt aattgttact ttcctttaca

23041 atcatatggt ttccaaccca ctaatggtgt tggttaccaa ccatacagag tagtagtact

23101 ttcttttgaa cttctacatg caccagcaac tgtttgtgga cctaaaaagt ctactaattt

23161 ggttaaaaac aaatgtgtca atttcaactt caatggttta acaggcacag gtgttcttac

23221 tgagtctaac aaaaagtttc tgcctttcca acaatttggc agagacattg ctgacactac

23281 tgatgctgtc cgtgatccac agacacttga gattcttgac attacaccat gttcttttgg

23341 tggtgtcagt gttataacac caggaacaaa tacttctaac caggttgctg ttctttatca

23401 ggatgttaac tgcacagaag tccctgttgc tattcatgca gatcaactta ctcctacttg

23461 gcgtgtttat tctacaggtt ctaatgtttt tcaaacacgt gcaggctgtt taataggggc

23521 tgaacatgtc aacaactcat atgagtgtga catacccatt ggtgcaggta tatgcgctag

23581 ttatcagact cagactaatt ctcctcggcg ggcacgtagt gtagctagtc aatccatcat

23641 tgcctacact atgtcacttg gtgcagaaaa ttcagttgct tactctaata actctattgc

23701 catacccaca aattttacta ttagtgttac cacagaaatt ctaccagtgt ctatgaccaa

23761 gacatcagta gattgtacaa tgtacatttg tggtgattca actgaatgca gcaatctttt

23821 gttgcaatat ggcagttttt gtacacaatt aaaccgtgct ttaactggaa tagctgttga

23881 acaagacaaa aacacccaag aagtttttgc acaagtcaaa caaatttaca aaacaccacc

23941 aattaaagat tttggtggtt ttaatttttc acaaatatta ccagatccat caaaaccaag

24001 caagaggtca tttattgaag atctactttt caacaaagtg acacttgcag atgctggctt

24061 catcaaacaa tatggtgatt gccttggtga tattgctgct agagacctca tttgtgcaca

24121 aaagtttaac ggccttactg ttttgccacc tttgctcaca gatgaaatga ttgctcaata

24181 cacttctgca ctgttagcgg gtacaatcac ttctggttgg acctttggtg caggtgctgc

24241 attacaaata ccatttgcta tgcaaatggc ttataggttt aatggtattg gagttacaca

24301 gaatgttctc tatgagaacc aaaaattgat tgccaaccaa tttaatagtg ctattggcaa

24361 aattcaagac tcactttctt ccacagcaag tgcacttgga aaacttcaag atgtggtcaa

24421 ccaaaatgca caagctttaa acacgcttgt taaacaactt agctccaatt ttggtgcaat

24481 ttcaagtgtt ttaaatgata tcctttcacg tcttgacaaa gttgaggctg aagtgcaaat

24541 tgataggttg atcacaggca gacttcaaag tttgcagaca tatgtgactc aacaattaat

24601 tagagctgca gaaatcagag cttctgctaa tcttgctgct actaaaatgt cagagtgtgt

24661 acttggacaa tcaaaaagag ttgatttttg tggaaagggc tatcatctta tgtccttccc

24721 tcagtcagca cctcatggtg tagtcttctt gcatgtgact tatgtccctg cacaagaaaa

24781 gaacttcaca actgctcctg ccatttgtca tgatggaaaa gcacactttc ctcgtgaagg

24841 tgtctttgtt tcaaatggca cacactggtt tgtaacacaa aggaattttt atgaaccaca

24901 aatcattact acagacaaca catttgtgtc tggtaactgt gatgttgtaa taggaattgt

24961 caacaacaca gtttatgatc ctttgcaacc tgaattagac tcattcaagg aggagttaga

25021 taaatatttt aagaatcata catcaccaga tgttgattta ggtgacatct ctggcattaa

25081 tgcttcagtt gtaaacattc aaaaagaaat tgaccgcctc aatgaggttg ccaagaattt

25141 aaatgaatct ctcatcgatc tccaagaact tggaaagtat gagcagtata taaaatggcc

25201 atggtacatt tggctaggtt ttatagctgg cttgattgcc atagtaatgg tgacaattat

25261 gctttgctgt atgaccagtt gctgtagttg tctcaagggc tgttgttctt gtggatcctg

25321 ctgcaaattt gatgaagacg actctgagcc agtgctcaaa ggagtcaaat tacattacac

25381 ataaacgaac ttatggattt gtttatgaga atcttcacaa ttggaactgt aactttgaag

25441 caaggtgaaa tcaaggatgc tactccttca gattttgttc gcgctactgc aacgataccg

25501 atacaagcct cactcccttt cggatggctt attgttggcg ttgcacttct tgctgttttt

25561 cagagcgctt ccaaaatcat aaccctcaaa aagagatggc aactagcact ctccaagggt

25621 gttcactttg tttgcaactt gctgttgttg tttgtaacag tttactcaca ccttttgctc

25681 gttgctgctg gccttgaagc cccttttctc tatctttatg ctttagtcta cttcttgcag

25741 agtataaact ttgtaagaat aataatgagg ctttggcttt gctggaaatg ccgttccaaa

25801 aacccattac tttatgatgc caactatttt ctttgctggc atactaattg ttacgactat

25861 tgtatacctt acaatagtgt aacttcttca attgtcatta cttcaggtga tggcacaaca

25921 agtcctattt ctgaacatga ctaccagatt ggtggttata ctgaaaaatg ggaatctgga

25981 gtaaaagact gtgttgtatt acacagttac ttcacttcag actattacca gctgtactca

26041 actcaattga gtacagacac tggtgttgaa catgttacct tcttcatcta caataaaatt

26101 gttgatgagc ctgaagaaca tgtccaaatt cacacaatcg acggttcatc cggagttgtt

26161 aatccagtaa tggaaccaat ttatgatgaa ccgacgacga ctactagcgt gcctttgtaa

26221 gcacaagctg atgagtacga acttatgtac tcattcgttt cggaagagac aggtacgtta

26281 atagttaata gcgtacttct ttttcttgct ttcgtggtat tcttgctagt tacactagcc

26341 atccttactg cgcttcgatt gtgtgcgtac tgctgcaata ttgttaacgt gagtcttgta

26401 aaaccttctt tttacgttta ctctcgtgtt aaaaatctga attcttctag agttcctgat

26461 cttctggtct aaacgaacta aatattatat tagtttttct gtttggaact ttaattttag

26521 ccatggcaga ttccaacggt actattaccg ttgaagagct taaaaagctc cttgaacaat

26581 ggaacctagt aataggtttc ctattcctta catggatttg tcttctacaa tttgcctatg

26641 ccaacaggaa taggtttttg tatataatta agttaatttt cctctggctg ttatggccag

26701 taactttagc ttgttttgtg cttgctgctg tttacagaat aaattggatc accggtggaa

26761 ttgctatcgc aatggcttgt cttgtaggct tgatgtggct cagctacttc attgcttctt

26821 tcagactgtt tgcgcgtacg cgttccatgt ggtcattcaa tccagaaact aacattcttc

26881 tcaacgtgcc actccatggc actattctga ccagaccgct tctagaaagt gaactcgtaa

26941 tcggagctgt gatccttcgt ggacatcttc gtattgctgg acaccatcta ggacgctgtg

27001 acatcaagga cctgcctaaa gaaatcactg ttgctacatc acgaacgctt tcttattaca

27061 aattgggagc ttcgcagcgt gtagcaggtg actcaggttt tgctgcatac agtcgctaca

27121 ggattggcaa ctataaatta aacacagacc attccagtag cagtgacaat attgctttgc

27181 ttgtacagta agtgacaaca gatgtttcat ctcgttgact ttcaggttac tatagcagag

27241 atattactaa ttattatgag gacttttaaa gtttccattt ggaatcttga ttacatcata

27301 aacctcataa ttaaaaattt atctaagtca ctaactgaga ataaatattc tcaattagat

27361 gaagagcaac caatggagat tgattaaacg aacatgaaaa ttattctttt cttggcactg

27421 ataacactcg ctacttgtga gctttatcac taccaagagt gtgttagagg tacaacagta

27481 cttttaaaag aaccttgctc ttctggaaca tacgagggca attcaccatt tcatcctcta

27541 gctgataaca aatttgcact gacttgcttt agcactcaat ttgcttttgc ttgtcctgac

27601 ggcgtaaaac acgtctatca gttacgtgcc agatcagttt cacctaaact gttcatcaga

27661 caagaggaag ttcaagaact ttactctcca atttttctta ttgttgcggc aatagtgttt

27721 ataacacttt gcttcacact caaaagaaag acagaatgat tgaactttca ttaattgact

27781 tctatttgtg ctttttagcc tttctgctat tccttgtttt aattatgctt attatctttt

27841 ggttctcact tgaactgcaa gatcataatg aaacttgtca cgcctaaacg aacatgaaat

27901 ttcttgtttt cttaggaatc atcacaactg tagctgcatt tcaccaagaa tgtagtttac

27961 agtcatgtac tcaacatcaa ccatatgtag ttgatgaccc gtgtcctatt cacttctatt

28021 ctaaatggta tattagagta ggagctagaa aatcagcacc tttaattgaa ttgtgcgtgg

28081 atgaggctgg ttctaaatca cccattcagt acatcgatat cggtaattat acagtttcct

28141 gtttaccttt tacaattaat tgccaggaac ctaaattggg tagtcttgta gtgcgttgtt

28201 cgttctatga agacttttta gagtatcatg acgttcgtgt tgttttagat ttcatctaaa

28261 cgaacaaact aaaatgtctg ataatggacc ccaaaatcag cgaaatgcac cccgcattac

28321 gtttggtgga ccctcagatt caactggcag taaccagaat ggagaacgca gtggggcgcg

28381 atcaaaacaa cgtcggcccc aaggtttacc caataatact gcgtcttggt tcaccgctct

28441 cactcaacat ggcaaggaag accttaaatt ccctcgagga caaggcgttc caattaacac

28501 caatagcagt ccagatgacc aaattggcta ctaccgaaga gctaccagac gaattcgtgg

28561 tggtgacggt aaaatgaaag atctcagtcc aagatggtat ttctactacc taggaactgg

28621 gccagaagct ggacttccct atggtgctaa caaagacggc atcatatggg ttgcaactga

28681 gggagccttg aatacaccaa aagatcacat tggcacccgc aatcctgcta acaatgctgc

28741 aatcgtgcta caacttcctc aaggaacaac attgccaaaa ggcttctacg cagaagggag

28801 cagaggcggc agtcaagcct cttctcgttc ctcatcacgt agtcgcaaca gttcaagaaa

28861 ttcaactcca ggcagcagta ggggaacttc tcctgctaga atggctggca atggcggtga

28921 tgctgctctt gctttgctgc tgcttgacag attgaaccag cttgagagca aaatgtctgg

28981 taaaggccaa caacaacaag gccaaactgt cactaagaaa tctgctgctg aggcttctaa

29041 gaagcctcgg caaaaacgta ctgccactaa agcatacaat gtaacacaag ctttcggcag

29101 acgtggtcca gaacaaaccc aaggaaattt tggggaccag gaactaatca gacaaggaac

29161 tgattacaaa cattggccgc aaattgcaca atttgccccc agcgcttcag cgttcttcgg

29221 aatgtcgcgc attggcatgg aagtcacacc ttcgggaacg tggttgacct acacaggtgc

29281 catcaaattg gatgacaaag atccaaattt caaagatcaa gtcattttgc tgaataagca

29341 tattgacgca tacaaaacat tcccaccaac agagcctaaa aaggacaaaa agaagaaggc

29401 tgatgaaact caagccttac cgcagagaca gaagaaacag caaactgtga ctcttcttcc

29461 tgctgcagat ttggatgatt tctccaaaca attgcaacaa tccatgagca gtgctgactc

29521 aactcaggcc taaactcatg cagaccacac aaggcagatg ggctatataa acgttttcgc

29581 ttttccgttt acgatatata gtctactctt gtgcagaatg aattctcgta actacatagc

29641 acaagtagat gtagttaact ttaatctcac atagcaatct ttaatcagtg tgtaacatta

29701 gggaggactt gaaagagcca ccacattttc accgaggcca cgcggagtac gatcgagtgt

29761 acagtgaaca atgctaggga gagctgccta tatggaagag ccctaatgtg taaaattaat

29821 tttagtagtg ctatccccat gtgattttaa tagcttctta ggagaatgac aaaaaaaaaa

29881 aaaaaaaaaa aaaaaaaaaa aaa

治験依頼者・治験責任医師・IRB

2020年3月18日

新型コロナウイルスCOVID-19による感染症が蔓延しつつある。

コロナウイルスに試験施設の人員の感染や被験者の感染などが発生した場合、試験施設の閉鎖、移動制限、治験薬のためのサプライチェーンの障害などが生じうる。このような場合には、プロトコールの変更は避けられない。

COVID-19による影響は、試験中の疾患の特徴、試験デザイン、試験が実施されている地域など多数の要因によって起こりうる。

代替プロセスの実施は可能な限りプロトコールと一致しているべきであり、治験依頼者及び治験責任医師は、実施した不測の措置の理由を文書化すべきである。治験依頼者及び治験責任医師は、COVID-19に関連した制限が、どのように試験実施の変更とその変更の期間につながったのかを文書化し、どの試験参加者が影響を受けたのか、どのように影響を受けたのかを示すべきである。

試験の評価については、電話連絡やバーチャルな訪問などで代替できる方法により実施、また、もはや治験薬や試験サイトにアクセスする必要のない参加者には追加の安全性モニタリングを実施すること

試験の受診スケジュールの変更、欠席、または患者の中止は、情報の欠落につながる可能性がある(例えば、プロトコールで指定された手技の場合)。症例報告書には、欠落したプロトコール指定情報(例えば、COVID-19による試験受診の欠席や試験中止など)のCOVID-19との関係を含め、欠落したデータの根拠を説明する具体的な情報を記載することが重要であろう。臨床試験報告書にまとめられたこの情報は、治験依頼者及びFDAにとって有用である。

臨床現場での予定された診察に大きな影響が出る場合、通常、自己投与で配布されているような特定の治験薬は、代替の安全な投与方法を利用することが可能であるかもしれない。通常、医療環境で投与される他の治験薬については、代替投与(例えば、訓練を受けているが研究者以外 の職員による訪問看護や代替施設など)の計画についてFDAの審査部門に相談することが推奨される。いずれの場合も、治験薬の説明責任を維持するための既存の規制要件は依然として残っており、これに対処し、文書化すべきである。

有効性評価については、可能であれば、バーチャル評価の利用、評価の遅延、研究特異的検体の代替採取等、有効性評価のプロト コルの変更について、適切な審査部門との協議を行うことを推奨する。有効性評価項目が収集されなかった個別の事例については、有効性評価を取得できなかった理由を文書化する(例:COVID-19 によ る具体的な制限事項を明らかにし、プロトコールで指定された評価を実施できなかった理由を明らかにする)。

治験依頼者、治験責任医師、及び IRB は、試験実施施設における COVID-19 対照措置の結果として試験が中断される可能性がある場合に、試験参加者を保護し、 試験の実施を管理するために使用するアプローチを記述するための方針と手順の確立と実施、又は既存の方針と 手順の改訂を検討すべきである。方針と手順の変更は、インフォームド・コンセントのプロセス、試験の訪問と手順、データ収集、試験のモニ タリング、有害事象の報告、及び渡航制限、検疫措置、または COVID-19 疾病そのものに起因する治験責任医師、治験実施施設のスタッフ、及び/またはモニターの変更への影響に 対処しうるが、これらに限定されるものではない。方針と手順は、COVID-19の管理と管理のために適用される(地域または国の)方針に準拠しているべきである。上記の変更の性質に応じて、適用される規則の下でプロトコルの修正が要求されることがあります。

以上は一部抜粋の情報です。詳細はオリジナルをご参照ください。

EMAも治験依頼者向けガイダンスを3月20日付で公開

PMDAも関連のQAを3月30日付で公開

各施設での対応状況(5月14日付)

最近安倍総理大臣もこの言葉を覚えた模様で「日本版CDC」をというような使い方がなされています。業界の人にとっては、ガイドラインを出しているのでその周辺でお目にかかることが多いのではないかと思います。私は、1995年公開の映画「アウトブレイク」で初めて耳にしました。1999年に留学したFDAとは、同じHHSの下部組織、つまりFDAとCDCは組織図上は横並びでした。CDCはどういった仕事をしているのでしょうか?業務は多岐にわたります。CDCの仕事を身近に感じたのは、1999年留学した年に、テレビで「鳥の死骸から西ナイルウイルスが検出された」という発表でした。米国には鳥の死骸のウイルス検査をしている行政機関があることに驚きました。その後ニューヨークでは夜間にヘリコプターで住宅街に殺虫剤をまくというプロジェクトが放送されていました。夜間に殺虫剤をまくので窓を閉めて寝るようにという放送がされていました。テレビの放送によると、「人体には無害な殺虫剤をまくが、念のため窓を閉めておくように」という事でした。

次に私が目にしたのは、FDAのCBERと共同してワクチンの副反応を集めて集計している業務です。パピローマウイルスワクチン、いわゆる「子宮頸がんワクチン」を知り合いたちと集計したのを覚えています。当時日本では上市前でしたが、すでに上市されていた米国のデータではStill病のような副反応が報告されているという内容でした。

そして今回の新型コロナウイルス肺炎の騒ぎで、伝染病のサーベイランスと結果次第では早めの対応を日本でもできるようにという事で、「日本版CDC」をという議論が出てきているのだろうと思います。ただ、良く判らないのがその機能を担う機関が日本に全くないのかというとそういう訳でもないように思います。それは、さておき感染症のサーベイランスはどんな風にデータを評価しているのか少し調べてみました。Getting started with outbreak detection (アウトブレイクを検出することから始めよう)という論文によるとCDC algorithmという手法があります。新たに発症した症例数を週ごとに集計して、過去の発症例数と比較して変動の範囲を超えて感染者が増加した場合に、そのシグナルを検出する手法です。どんなふうになるのか、論理的な事や数式が出てきて良く判らなくなるよりは、実際のCOVID-19の日本のデータ(3月10日までの集計)を流し込んでどういったアウトプットが得られるのか、ながめてみることにしました。

図はFarringtonアルゴリズムの出力です。四角い柱で1日ごとの度数が表示されています。右端のピークが3月6日。初めのアラートが1月28日(3例報告の日)。

実は、CDCが感染の流行をモニターしているアルゴリズムは週ごとのデータしか解析しないということで、今回のような短期のデータを日ごとで集計しようとするとエラーになりました。そこで教科書にCDCのアルゴリズムと列挙されていましたもう一つのアルゴリズム Farringtonのアルゴリズムで集計してみました。とりあえず、過去50日分の報告件数から、変動の範囲内なのか流行の兆しなのかを計算しているようです。この手法は、日ごろから散発的に報告があるような疾患で、急に増えて流行の兆しではないかというのを早期に発見する目的で使用するのに適している様で、COVID-19の様に全く新しい疾患に対しては、この手法は向いていないように思いました。残念。

A statistical algorithm for the early detection of outbreaks of infectious disease, Farrington, C.P.,Andrews, N.J, Beale A.D. and Catchpole, M.A. (1996), J. R. Statist. Soc. A, 159, 547-563.

https://www.jstor.org/stable/2983331?seq=1

library(“surveillance”)

library(readxl)

X0020_STS <- read_excel(“COVID_STS.xlsx”)

CovidDisProg <- create.disProg(week = X0020_STS$week,

observed = X0020_STS$observed,

state = X0020_STS$state,

start = c(2018, 1),

freq=365,

epochAsDate=TRUE)

#Do surveillance for the last 50 days.

n <- length(CovidDisProg$observed)

#Set control parameters.

control <- list(b=2,w=3,range=(n-50):n,reweight=TRUE, verbose=FALSE,alpha=0.01)

res <- algo.farrington(CovidDisProg,control=control)

#Plot the result.

plot(res,disease=”COVID-19″,method=”Farrington”)

# sts.cdc <- algo.cdc(CovidDisProg, control = control)

# <<<error>>> algo.cdc only works for weekly data.

# plot(sts.cdc, legend.opts=NULL)

使用した集計済ファイル

https://gis.jag-japan.com/covid19jp/?fbclid=IwAR3FWAcLkQvXDTUUMtNwm7qRFhplIxSREML5m-rrPXWzfz7IxVANOBdSeSY

COVID-19の原因ウイルスであるSARS-CoV-2のMproというタンパク質の構造がPDBに公開されていました。エントリは6LU7。PDBの解説の書き方からすると、このウイルスのタンパク質で他にも構造が決定されている分子があるけれども、(無料で無条件に)公開されているのはこれだけだという様に取れます*1。新しいものを発見したとして、その知財を囲い込んで自分達の金儲けにするというのは20世紀的なアメリカンドリームであって、ものが不足していた頃の考え方で時代遅れでないかと。発見したもので社会の様々な問題を解決したり、世の中を良くする様にというような考え方が広がらないかなぁ。それはさておき、タンパク質の発現にバキュロと昆虫細胞を使った系が多い中、このタンパク質は私が慣れ親しんだBL21(DE3)で発現しています。公開されたものを見てみましょう。(レンダリングは私がしましたが、構造はPDB 6LU7を使用しています)

*1 その後多くの構造が公開されています。ACE2とウイルスのタンパク質の結合についてを別記事に追加しました。(2020.04.11)

SARS-CoV-2コロナウイルス3CLヒドロラーゼ(Mpro)のタンパク質で、登録された構造全体(1分子分)を表示しています。Mpro(白)以外にオリゴペプチド様の阻害薬(薄紫)が一緒に含まれています。

阻害薬付近が見えやすいように薄切り(Slab)にしてみます。

両分子間で水素結合がありそうなところを見やすい表示にしてみました。ちゃんとはまり込んでいるような感じになっています。

PDB newsによると、「PDBアーカイブのエントリーと比較すると、少なくとも90%の配列相同性を持つ蛋白質が95件のPDBエントリーで同定されました。さらに、これらの関連蛋白質構造には、約30個の異なる低分子阻害薬が含まれており、新薬の発見に役立つ可能性があります。」とのこと。それらの阻害薬は治療薬の開発の出発点になるかもしれません。

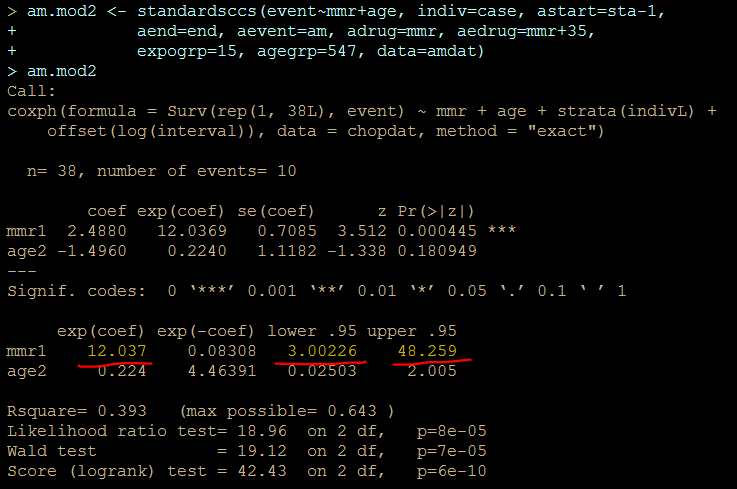

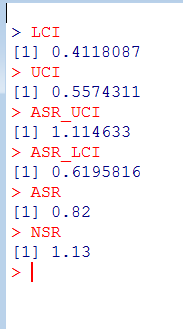

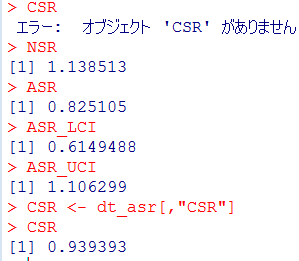

Self-controlled case series (SCCS) の解析方法を試しはじめてしばらく時間が経過しました。その間に、このSCCSを計算するためのRのパッケージが開発されていまして、一見したところ便利そうです。とりあえず、ご多分に漏れず、このパッケージを利用して、過去にやった方法や論文1と同じ結果が得られるのか試してみました。

install("SCCS")

適切なサーバーを選択してインストールが完了するのを待ちます。

library("SCCS")

以前Observation Islandで試したoxfordデータが,このパッケージではamdatという名前で含まれています。どんな内容か確認してみます。

amdat case sta end am mmr 1 1 366 730 398 458 2 2 366 730 399 750 3 3 366 730 413 392 4 4 366 730 449 429 5 5 366 730 455 433 6 6 366 730 472 432 7 7 366 730 474 395 8 8 366 730 485 470 9 9 366 730 524 496 10 10 366 730 700 428

良く似ているけど微妙に調整が必要そうです

| indiv | eventday | start | end | exday |

| 1 | 398 | 365 | 730 | 458 |

| 2 | 413 | 365 | 730 | 392 |

| 3 | 449 | 365 | 730 | 429 |

| 4 | 455 | 365 | 730 | 433 |

| 5 | 472 | 365 | 730 | 432 |

| 6 | 474 | 365 | 730 | 395 |

| 7 | 485 | 365 | 730 | 470 |

| 8 | 524 | 365 | 730 | 496 |

| 9 | 700 | 365 | 730 | 428 |

| 10 | 399 | 365 | 730 | 716 |

前回試したoxfordデータでは開始日が365でした。このパッケージのamdatでは366に。うるう年にかかってしまったかな?などと思うのも良いのですが、とりあえず同じデータで開始したいので、試行する間はパラメータを投入する際にこの部分を前回と同じになるように調整。具体的にはスクリプトでパラメータを与える際に、

astart=sta

と与えるべきところを

astart=sta-1

にして調整しました。

前回試したoxfordデータでは症例10の接種日は716日目だったのが、今回パッケージ附属のamdatでは同じ症例が「症例2」になっていて,接種日が750日目になっていました。とりあえずここも、前回に合わせておきたいので

amdat[2,5] <- 716

とやります。なんだか、細かい質の部分に齟齬が残っているな。

上記2点の調整を含むスクリプトは次のようになります。

library("SCCS")

amdat[2,5] <- 716

am.mod2 <- standardsccs(event~mmr+age, indiv=case, astart=sta-1,

aend=end, aevent=am, adrug=mmr, aedrug=mmr+35,

expogrp=15, agegrp=547, data=amdat)

am.mod2

解りにくいかもしれませんが、mmrのオッズ比が12.037 (95%CI, 3.00226, 48.259)となりました。

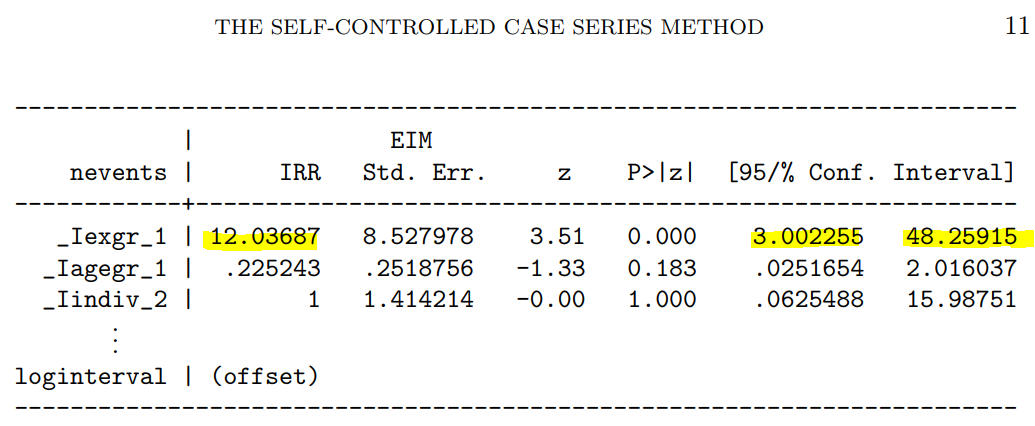

次に示すのが論文で掲載された結果です。今回の集計結果と論文の結果で、小数点以下2桁くらいまでは一致しています。誤差範囲と考えられますので、今回試したRのSCCSパッケージが思ったように機能することが確認できました。投入する前のデータさえきちんとしていたら、使えそうだという印象を持ちました。

新規インフルエンザ治療薬に耐性を持つというウイルスが、その新規治療薬を使用した患者さんから検出されたという事で、2018.1.25にニュースが流れました。その治療薬は、キャップ依存性エンドヌクレアーゼ (cap-dependent endonuclease) 阻害薬で、一般名バロキサビルマルボキシル (baloxavir marboxil) と呼ばれる医薬品です。ニュースではどういった検査をして「耐性」を確定したかは述べられていませんでした。ソースの情報によるとターゲット分子であるキャップ依存性エンドヌクレアーゼの変異であるPA I38T、I38F等を監視していることが述べられています。これらの変異はNCBIのデータベースに以前より存在していますので、普通に世の中にいるウイルスが持っていた変異だろうと思われます。そして、治験時には10%弱の被験者にこの変異が見られました。治験の情報によりますと、この変異を持っているウイルスにかかった被験者では、治療5日目、9日目にウイルスが検出された割合が野生型のウイルスにかかった被験者より高くなりました。1

この治験データで少し目に留まるのは、プラセボ治療群より変異ウイルスに対する実薬治療群の方がウイルスが検出される被験者の割合が高くなる様に見える点です。力価のグラフを見ていると、耐性ウイルスは一旦力価が低下した後、6日目あたりに再び力価が上昇するようなカーブを描いて見えます。これらのデータが、臨床的に深刻な問題なのかは何とも言えません。詳しく知りたい方は原著1や販売メーカーの資材を見てご検討ください。

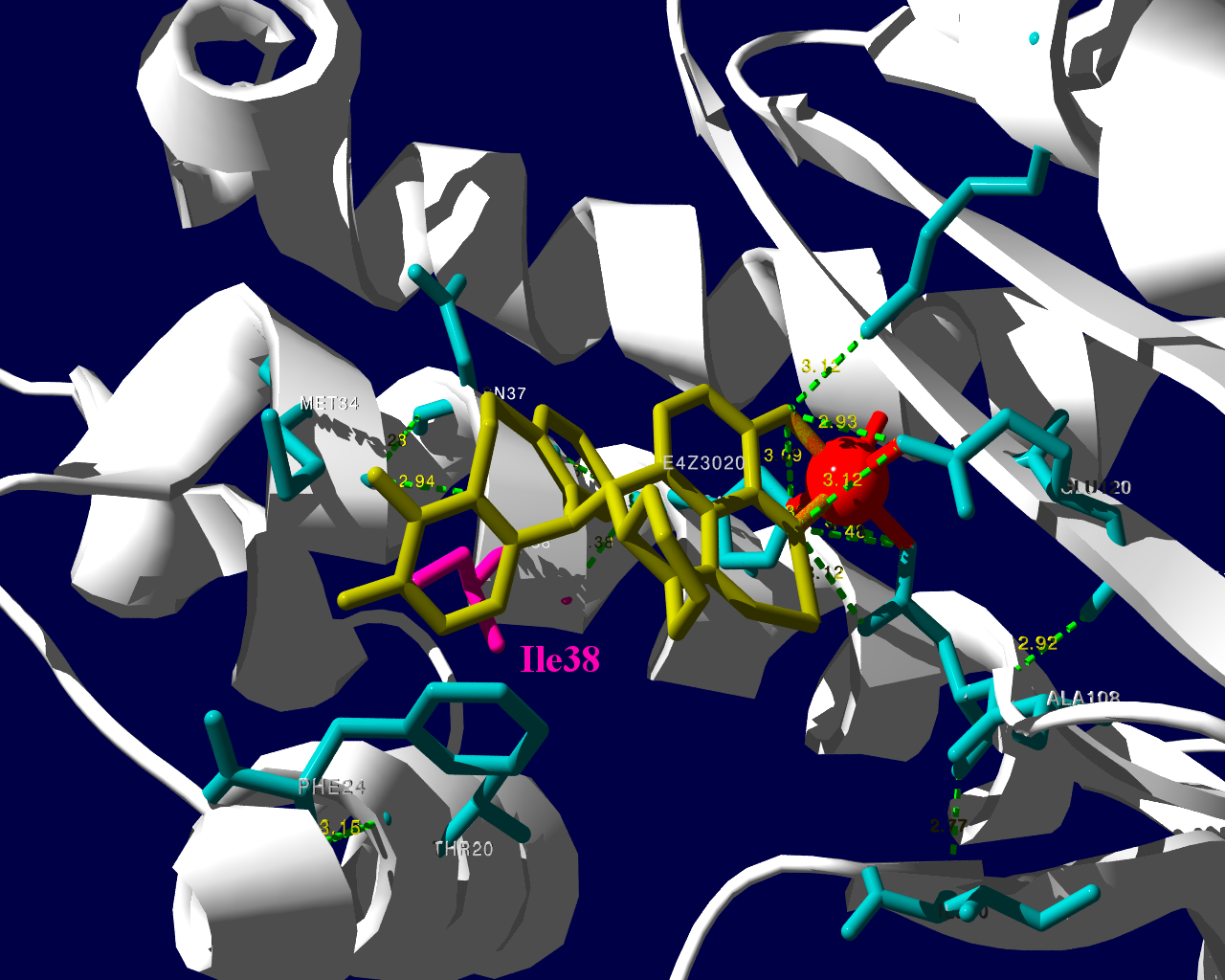

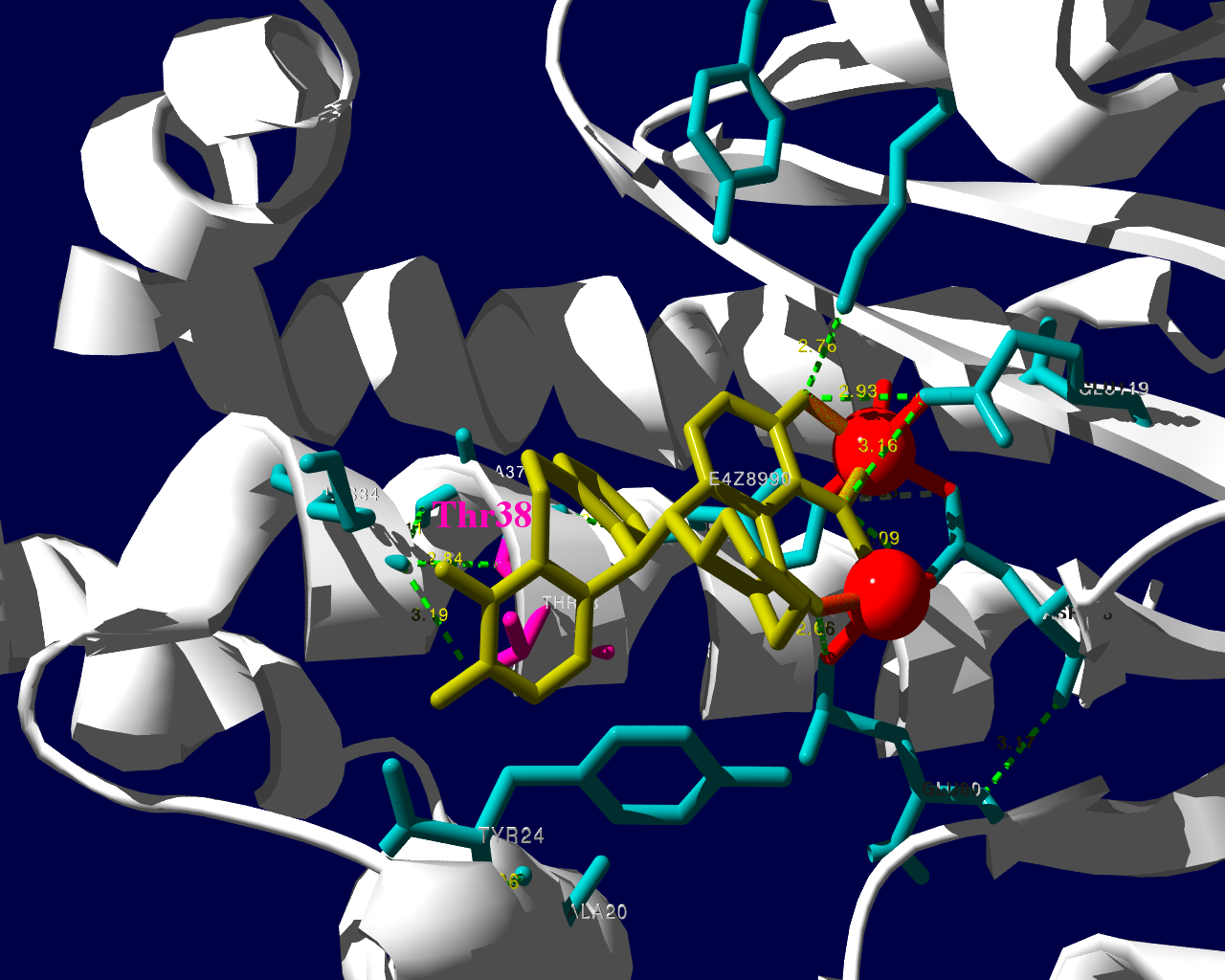

PA分子の結晶構造が公開されています2ので、これを利用し耐性のメカニズムに迫る。とか言うたいそうな話ではなく、とりあえず眺めてみました。黄色がbaloxavir acidです。(医薬品のbaloxavir marboxilとは、若干構造が違います)青が活性中心付近に側鎖を出しているアミノ酸残基。そして、ピンクが変異がある場所です。

I38Tの変異があっても、作用点にbaloxavir acidが一見きちんとハマっているようです。水素結合を作っているであろう原子間の距離が微妙に違うので、ファンデルワールス力が違い、結合の強さに差が出るのかもしれません。科学的に厳密なディスカッションは、論文2をご確認ください。

お遊びで、いつもより多めに回してみました。

国内の副作用データベースであるJADERを用いた解析の結果を論文として公表しました。この論文は2017年に公表したもので、ごく最近出版という訳ではありません。出版された際に著者には無料で見ることのできるリンクが提供されています。そのリンクは個人で楽しむ目的で使用するものと思っていましたが、Circulationのインストラクションを読んでいましたところ、自身のホームページにリンクを張ることが許可されている事が判明しました。ですので、個人ブログにそのリンクを張ります。

…Circulation誌のインストラクション Q&Aパート…

Q—Can I post my article on the Internet?

A—Corresponding authors will receive “toll-free” links to their published article. This URL can be placed on an author’s personal or institutional web site. Those who click on the link will be able to access the article as it published online in the AHA journal (with or without a subscription). Should coauthors or colleagues be interested in viewing the article for their own use, authors may provide them with the URL; a copy of the article may not be forwarded electronically.

…

<http://circ.ahajournals.org/content/135/8/815>

Yasuo Oshima, Tetsuya Tanimoto, Koichiro Yuji, Arinobu TojoAssociation Between Aortic Dissection and Systemic Exposure of Vascular Endothelial Growth Factor Pathway Inhibitors in the Japanese Adverse Drug Event Report Database

https://doi.org/10.1161/CIRCULATIONAHA.116.025144

Circulation. 2017;135:815-817Originally published February 21, 2017

市販後の副作用報告データベースを解析して、副作用を研究するにあたって、意識しておくべき問題の一つに、アンダーレポーティングがあります。医薬品を製造販売する企業は、その医薬品の副作用情報を知った際には、一定の基準に該当する場合には、設定された期限内に規制当局へ報告することが義務付けられています。(一定の基準とは、自社の医薬品と有害事象の間に因果関係がある、有害事象が重篤である、の2点です。このほかに、「噂(うわさ)」ではなく、副作用を起こしたとされる患者が実在すること、医療目的で使用された医薬品であること、国内で使用された医薬品であることなど周辺の手続き的なコンディションが若干あります。)これに対して、医療現場の主プレーヤーである医師には、「保健衛生上の危害の発生又は拡大を防止するため必要があると認めるとき(薬機法68条の10-2)」に限って当該副作用を報告するように義務付けられています。もちろん、治験や臨床研究で一定基準のものを、どこかへ報告するように定めた契約が存在する場合には、その契約に基づく義務が発生します。

企業で副作用の仕事をしていると、規制当局であるPMDAの査察の際に厳しくチェックされるため、知った副作用情報の報告漏れが無いようにと意識を働かせる動機があります。一方、医師は副作用報告について、発現したものを報告漏れだというような指摘を受ける機会はほとんどなく、実際に発現した副作用を、どこかへ報告するという、動機が働きません。どこへも報告されない副作用が、だれに知られることもなく埋もれてしまう、というようなことも十分想定されます。その、発現しているにもかかわらず、規制当局に報告されない副作用が一定程度存在することをアンダーレポーティングと言っています。

アンダーレポーティングがある事で、規制当局や製造販売元企業がリスクに気づくのに遅れる可能性があります。また、様々な集計の際にしばしば2つ以上の何かを比較します。例えばA薬とB薬を比較する時に、この2つの薬剤で同じ頻度でアンダーレポーティングが起きているという前提がないと厳密になりません。この前提が正しいかどうかを明らかにするのは難しいものです。

感覚としては、起きた副作用がすべて規制当局に報告されているとことはないと思いますが、実際にそういう事が起きているというのが科学的に記述されているでしょうか。この点について、臨床現場の先生が有害事象を選んで報告していることを明らかにした英国及びアイルランドからの研究報告があります1,2。

また、スロベニアで一次市中病院から四次紹介先病院までの入院カルテをレビューした報告によると、医薬品の副作用による入院を医師が認識していても、コーディングしてデータを登録して報告することはまれでした3。

副作用が起きたとして、全てが規制当局に集約される様な仕組みではないので、仕方ないというか。全てが報告されることを基準にして、それより低いから「アンダー」という発想が現実からかけ離れたことを想定しているというか。まぁ、医療関係者も忙しいし、報告自体よりその仕組みを理解するのが面倒だったりというのもあり。全体に違和感のある部分ではあるのですが要因を分析して見ないことには、次に繋がらないのでこの辺りも少し調べました。

ベネズエラでの研究グループは、医師が自発報告のシステムについての知識が乏しい事が示され、これがアンダーレポーティングの原因であるという仮説を主張しました4。

スペインからの報告5では、

がアンダーレポーティングの要因として提唱されました。スペインからのもう一つの報告6では

がアンダーレポーティングの要因として提唱されました。

スペインの報告2の(1)を「関心の低さ」と訳しましたが、原文ではforgetfulnessでした。スペインでは薬剤師が(3)の判断をしているのかと少々驚きました。日本で調剤薬局の薬剤師から受ける報告では、「薬を使った」→「何か症状を訴えている」で、ほぼ何も考えず「副作用である」かのごとき報告がなされます。「副作用名」もほぼ「患者が訴える症状」あるいは、「診断根拠が希薄な病名」です。調剤薬局の薬剤師からの情報は処方箋に書かれた医薬品情報と患者さんとの会話でしか病状を把握できないことがほとんどで、基礎疾患や臨床検査値や治療の経過等の正確な情報が得られることはほとんどありません。その上、多くの場合処方した医師に対して、薬局薬剤師や企業が因果関係を問う事も難しいことが多く、薬局薬剤師の置かれた立場からすると、得られる情報が限定的なのもやむを得ないことです。結果として薬局経由の情報は症例評価には限界があります。

ドイツからの報告7で、メールにより1315名の医師にアンケートした結果によると、規制当局のシステムを使って報告するより、製薬企業を通して報告する方を好む医師が多かったということです。この報告によると、アンダーレポーティングを抑制するには、医師による自発報告を支援する事が提案されました。経済的インセンティブや教育活動によって、臨床家が副作用を報告する活動が改善することを報告した論文は複数あります8–12。副作用を疑ったら、規制当局に報告するという活動があることを知る患者は少なく、患者自身による副作用報告を提案する論文もあります13–15。

インセンティブを厚くすると、その副作用も心配です。容易に想像できるのはそのインセンティブ(お金)目当てで、あまり大したことのない副作用を多数報告する臨床家や、最悪存在しない副作用を報告するものも現れるかもしれません。私としては報告を少々増やすことと引き換えに、これらのノイズを多く混入させることが解析上の困難を引き起こすことを懸念します。

患者自身の副作用報告については、医学的な判断の入った診断と言うよりは主観的な症状が中心になります。患者は副作用を経験したと思ったら、規制当局に報告するより、疑わしい医薬品を処方した医師に相談して、医学的判断を仰ぐので良いのではないでしょうか。忙しそうにしている医師にわざわざ報告するほどでもない、という副作用であれば、規制当局的にも必ずしも重要視しないような病態ではないかと思います。

現在の自発報告の状況は完璧とは程遠いものです。でも、これまでいくつかの薬害を乗り越えて、副作用報告の制度が洗練されてきた医療用医薬品に関しては、深刻なほど、新規の副作用の検出力が低いとも思えません。

医薬品関連の業者Aが、「副作用の収集業務を始めました。サンプルとしてこういう情報を入手しています」として、数件の副作用情報を製薬企業Bに提供しました。薬事法(現薬機法)に基づきますと、製造販売元企業Bが副作用情報を入手した際には規制当局に報告する義務が発生します。ですのでその情報も当局へ報告しました。企業Bも海外本社のグローバルカンパニーで、一定の基準に合致する副作用は、米国FDAや欧州EMAをはじめ各国の規制に従ってそれぞれの規制当局へ報告されました。

製薬企業は、入手した情報について、具体的で詳細な情報を確認に行くように、と規制当局より常々指導されています。当初業者Aより入手した情報に基づき、情報源とされる調剤薬局や卸業者さんへ企業Bの営業担当者がそれぞれ手分けして確認に行きました。すると、行った先では「そのような副作用情報を報告していない」「業者Aが何かないかとしつこく聞くので適当に作り話を言った」というようなケースばかりで、副作用症例が実在しないことが明らかになったのです。ねつ造情報でも、それらしいものを企業に送り付ければ、ビジネスになるというような安易な理解で参入しようとしていたとの結論に至りました。副作用報告の規制の細やかさを理解していれば、虚偽の情報を企業が裏を取らない訳にはいかない事は明白なのですが、その規制すら理解していないようです。

ねつ造情報に基づいて、各地の営業担当者が薬局や卸さんを訪れ、さらに、「そんな情報はない」と言うような話のかみ合わない不快な時間を過ごしました。訪問された方も、業者Aにしつこく副作用の情報を求められ、その後製造販売元のAから再度詳細情報を求められ、と無意味な問い合わせの対応に時間を取られました。企業Bでは日本をはじめ各国の規制当局には、副作用情報を報告した後、さらに、取り下げの手続きを行ったりと大変な無駄な業務を余儀なくされました。その時には業者Aが解りやすくねつ造していましたので、まもなくねつ造が確認できました。でも、情報源である医療関係者が虚偽の報告を(少額の)お金のために作り始めると、なかなか裏を取ることは難しそうです。

<この記事は内科学会雑誌に掲載した記事のセルフアーカイブです。誤字脱字等も含め内容は公開版の最終稿と同一です。>

大島康雄

東京大学医科学研究所 先端医療研究センター・分子療法分野

郵便番号 108-8639

住所 東京都港区白金台4丁目6-1

℡ 03-6301-3845

電子メール 0-oshima@umin.ac.jp

医薬品医療機器総合機構(PMDA)のWebpageによると、2010年1月から11月までの期間に使用上の注意の改訂指示があった医薬品情報は170件あった (http://www.info.pmda.go.jp/kaitei/kaitei_index.html)。 この中でアンジオテンシンII受容体拮抗薬、いわゆるARBである、オルメサルタン メドキソミル、テルミサルタン、バルサルタンおよびそれらを薬効成分として含む合剤の使用上の注意へ、「横紋筋融解症」の副作用の記載をするようにとの指示が私の目を引いた。横紋筋融解症は一旦発現すると死亡に至る割合が1割近くもある深刻な病態である(1)。それに加え、ARBは臨床現場で多くの患者さんへ使用されているため、規制当局からの情報は大きな影響力を有しかねない。しかし、気になったのはそのためだけではない。HMG-CoA還元酵素阻害薬、いわゆるスタチン類では個人的な臨床経験としてあるいは同僚医師らとのコミュニケーションの中で、クレアチンキナーゼ上昇あるいはこれに筋症状等の伴った筋炎と考えられる症例を経験することがあり、スタチンの筋組織に対する傷害性を感じる機会があった。これに対して、ARBではこうした身近な経験をする機会が乏しかったのだ。厚生労働省のWebpage掲載文書である医薬品・医療機器等安全性情報No.271の記載によると、オルメサルタン メドキソミルでは、平成21年度1年間での使用者数おおよそ180万人に対し、集計当時の直近3年間に横紋筋融解症症例のうち因果関係が否定できない症例が1例報告されている、との記載がある。 同じくテルミサルタンでは1年間使用者数190万人に対し直近3年間で3症例、バルサルタンでも同じく410万人に対し4症例との記載である (http://www1.mhlw.go.jp/kinkyu/iyaku_j/iyaku_j/anzenseijyouhou/271-2.pdf)。医薬品・医療機器等安全性情報No.271には改訂指示の根拠となった症例の概要等に関する情報が紹介されている。それぞれの症例の臨床経過を見ると、症例で副作用が報告されていることは理解できるものの、添付文書上で注意喚起を行うという一種のリスクコミュニケーションが必要であると判断したロジックは読み取れない。医薬品・医療機器等安全性情報No.271に記載された数字には、副作用症例を経験された臨床現場の先生方が任意でご報告される、いわゆる自発報告に基づく数字が含まれると考えられる。自発報告の弱点として、発現していても報告されない症例が少なからずあると思われる、いわゆるアンダーレポーティングの問題が指摘されている。言い換えるならば、実際には報告されている症例より多くの有害事象が発現している可能性が考えられる。それにしてもこの程度の報告数であれば身近な経験が情報共有されないのも納得ができる。と同時に、添付文書上で注意喚起を行うというリスクコミュニケーションが本当に必要なのか、情報の受け手としての医師はこの情報をどの様に日々の臨床に生かすことが求められているのか疑問が湧く。

本稿では、医薬品使用中または投与後におきた医療上の好ましくない事象である有害事象が、本当に医薬品が原因で起きた副作用であるかどうか、すなわち有害事象と医薬品の因果関係についてどのような判断の方法があるのかについて、いくつかの例を紹介させていただく。

臨床試験中に生じた有害事象と試験薬との因果関係の評価は、重要な意味を持ちうるにもかかわらず、客観的で確立された基準があるとは言えない。疾患の経過中に起きた有害事象は、被疑薬による副作用のほか、被験者がもともと有していた病態に関連した症状、併用薬剤による事象、治療手技の合併症、それらとは無関係に偶然おこる偶発症などさまざまな可能性がある。原因についてのさまざまな可能性を臨床的に推論してゆく中で、相対的に他の要因が考えにくい場合に被疑薬との因果関係があると考えられる。つまり、いわゆる臨床推論そのものであり、一律に基準を設けるのは困難である。治験中に得られる安全性情報の取り扱いについて、INTERNATIONAL CONFERENCE ON HARMONISATION (ICH) E2Aという国際的ガイドラインがある。その規定によると、薬物投与後に起きた有害事象について、完全に否定することは論理的には困難であるにもかかわらず、「因果関係が否定できない場合に、合理性を以て因果関係の可能性があるとする」との考え方が記載されている(2)。脚注 このように基準となるべき文言についても客観的で一定の判断が常にできる基準とは言い難い。このほかにも考慮するべき点はいくつか報告されている。上記ICH E2Aの記述および、同じく国際的な治験や市販後の医薬品評価にかかわる議論を深めてきた国際医科学団体協議会(CIOMS, council for international organizations of medical science)が、因果関係の判断に関して考慮するべきとして指摘している点を4点以下に列挙する。あるべき姿として、評価できるだけの十分な医療上の情報を得たうえで判断がなされるべきである。

また、有害事象の性質や、被疑薬(代謝物を含む)の類薬についての以下のような情報がもしあれば、これらも含め総合的に因果関係が評価される場合もある。

上述の情報を考慮しても、個別症例の有害事象が被疑薬と因果関係があるのか否かの判断が困難なケースが少なくない。

臨床試験のうち、新医薬品等の承認を得るための臨床試験を治験と呼び、これはいわゆるGCP省令に基づいて行われ、治験届がなされている。治験では、個別症例の有害事象について、治験責任医師等がその因果関係判断を行う。治験責任医師等が当該有害事象と試験薬との因果関係を否定しないと、治験依頼者によって個別症例の副作用として報告等の必要な手続きが検討される。この場合試験が継続中であれば、規制当局および治験に参加している他施設への迅速報告が検討されるとともに、その重要度によっては試験の継続についても検討される。しかしながら、試験が終了し、集積検討をする段階では、個別症例についての因果関係評価に基づいてそのまま、「当該試験薬が当該副作用を引き起こす」というように医薬品が評価されるわけではない。むしろ逆である。Food and Drug Administration (FDA)では審査官(reviewer)向けのガイドブックが作成され公開されている。このFDAレビューワーガイダンスによると、有害事象の報告に責任のある治験責任医師(investigator)あるいは治験依頼者(新医薬品等の申請者としてapplicantの用語で登場する)が行った個別症例についての因果関係判断からは、あまり有用な情報が得られない、あるいは、無視するようにとも取れる記述がみられる。以下に因果関係評価についての記述をFDAのレビューワーガイダンスより引用する。(http://www.fda.gov/downloads/Drugs/GuidanceComplianceRegulatoryInformation/Guidances/ucm072974.pdf)

どうして個別症例の因果関係評価を無視するような記述になっているかと言うと、集積検討をする場合には個別症例の因果関係評価とは別の情報が加わるからである。どのような情報かについては、具体例を提示することで解説したい。以下に臨床試験に関する教科書であるStatistical Issues in Drug Developmentからの例を2つほど引用する(3)。元の教科書を参照していただければわかるが、これらの例は架空の例であり、過去に実施された治験についての記録ではない。しかし、本稿では過去に起きたような時制で文章を記述させていただくことをあらかじめお断りしておく。

これらの例で興味深いのは、個別症例の因果関係判断が、集積検討の結果覆る点である。集積検討で得られる情報で重要な情報は、比較対象であるプラセボ群との発現頻度についての情報である。お示ししたような例が論理的にはあり得るため、FDAでは医薬品の有害事象の因果関係判断を行うに当たって、治験責任医師や治験依頼者の行った個別症例の因果関係判断を必ずしもそのままでは受け入れず、7.1.1の死因の記述にある通り頻度を確認するようにしている。頻度が同程度であっても、実薬で早期に発現していないか、重症度が実薬で高くないか等が比較されることもある。

ここで、本稿の趣旨とははずれるが、もう一点強調しておきたい点がある。先の例では集積検討の結果、当初報告してきた治験担当医の因果関係評価は「誤り」となる。にもかかわらず、医薬品評価としては何の問題も生じない点である。開鍵後の集積検討の結果を知ることなしに、それまでの医学的な知識に基づいて、一定の合理的な判断をしている限り、結果として因果関係評価が誤りであったとしても、試験上は問題にはならないのである。筆者の知る限り、国内で試験に参加される先生の中には、こうした「結果として誤り」となることを恐れるあまりか、例1のような場合で、因果関係は絶対に否定しないお考えの先生方もおられる。そうした判断の考え方は例2のような場合には逆に結果として「誤り」となってしまう恐れがあることも考慮されておくべきであろう。治験責任医師らはご自身の知識と経験そして、目の前の患者さんの状況を鑑み、因果関係があるのかないのかを素直に医学的に判断して、それでも「結果として誤り」となることは避けられないものである。

また、臨床試験に参加される先生方のご心配は他にもあるようだ。「因果関係はないと思うが、治験責任医師らが因果関係を否定してしまうと、その事象が誰からも評価されることなく承認審査が行われるのではないか」と懸念され、因果関係を否定することに躊躇する先生方もおられる。これにも、誤解がある。個別症例の因果関係を「なし」とした場合、確かに試験継続中の他施設や規制当局への迅速な報告はなされないであろう。しかし、FDAレビューワーガイダンスに示した通り集積検討の段階では、因果関係が否定されている事象も含め、有害事象のリストに記載され、集計され、規制当局へ報告される。これは国内でも同様である。言い換えるならば個別症例の因果関係を否定したとしても、その有害事象の情報が闇に埋もれてしまう心配はない。

治験責任医師らは、自身の医学的知識と経験にもとづき、目の前の被験者の状況を鑑み、因果関係があるのかないのか、医学的に判断することに専念することが求められる役割であろう。

残念ながら、前述の例のようにプラセボと比較して実薬で発現頻度の高いものを「実薬による」とするように明快な判断ができる有害事象は、条件が整った限られたケースのようである。プラセボの情報が不十分な場合では、治療対象疾患の経過中に起きることが知られている合併症としての事象の頻度や、一般人口中での発症頻度、類薬での発現頻度などを比較の対象として判断する場合もあるかもしれない。いずれにしても上市時に得られている医薬品の副作用情報は限られている。市販後に初めてわかるような安全性情報も少なからずある。開発段階での安全性情報が不十分となる要因を先ほどとは別の教科書から引用する(4)。

このリストの中の問題の多くは、治験では選択基準に合致する限られた被験者に限られた期間のみ投与され、一定の観察期間のみの情報が収集されることから、市販後に起きる状況が十分評価できないのである。この要因の中で異質な点が5番目の要因である。ここに記載されている社会的要求が安全性の情報とのトレードオフとするような考え方に抵抗感を示す先生方もおられるかもしれない。しかし、過度な社会的要求は治験を最低限の期間で、かつ最小の被験者数で進めるような圧力になりかねない因子の一つである。そうした圧力の有無にかかわらず、治験段階での安全性評価は限られた情報であるとの認識に基づいて、市販後に安全性を監視し続けることが臨床医には求められていると考えられる。

市販後の安全性情報にはSolicited とUnsolicitedな情報がある。 Solicitedとは試験や調査等登録患者を一定期間観察して、有害事象が発症しないかを観察する、つまり観察される集団があらかじめ定義されている安全性情報をいう。市販後の情報の報告数を見るとSolicitedな情報も一部にはあるものの、数として多いのは自発報告等のUnsolicitedな情報である。自発報告とは、副作用症例を経験された臨床現場の先生方が任意でご報告される、副作用報告のことである。自発報告は2つの大きな問題を抱えており信頼できる発現頻度を計算することができない。第一は薬物曝露状況つまり、どのくらいの被疑薬投与症例に被疑薬がどのくらいの期間投与されたのか、事象の発現頻度であるincidenceを計算する場合の分母にできる数字がない。分母にできる数字の代替として思いつくものに、出荷数量から推計できる使用患者数がある。これについては、ヨーロッパの規制当局であるEMEA のガイドライン案によると、「すでに市販されている医薬品で、自発報告された有害事象数あるいは有害反応数を分子に、販売数を分母にした報告率は、医薬品使用者での有害反応の発生率の推定値として提示すべきではない。」とあり、少なくともヨーロッパでは好ましくないことと考えられている。出荷数量は流通在庫や期限切れによる廃棄の問題や一人当たりの使用量の推計が、どの程度実臨床の状況を反映されているのかが不明であるといった問題がある。このため分母が不正確にならざるを得ない状況があり、発生率の推定値としては好ましくないとしているのであろう。

第二は、実際に起きている有害事象のうち一部しか報告されていない、つまりアンダーレポートの問題があり、incidenceを計算する場合の分子にできる数字は、実際に事象が起きている件数より小さいと推定できる。そのほかにもいくつかの重要な問題があり以下にリスト化する。

不確実な診断は副作用報告の深刻な問題の一つである。自発報告では通常ごく限られた情報が報告されてくることから、規制当局や製薬企業が診断を確認することは困難である。さらに、報告者が薬局薬剤師、患者やその家族等であった場合は、診断や診断根拠を報告者自身が十分把握していない場合もある。副作用を診断した医師が報告する場合であっても、報告医師の専門分野以外の副作用については診断が正確でない場合が考えられる。また、他の病院などからの転院患者を引き受けて、その後起きた副作用を報告するような場合、前医で治療されていた基礎疾患やその臨床経過についてのデータを十分引き継いでいない場合もある。さらに、自発報告では仮によく知られている絞絡因子が当該症例にあったとしても、報告されてこないかもしれない。

診断自体に困難な点がない場合でも報告事象名の選択が悩ましいケースがある。例えば、高齢者の多発性骨髄腫の患者に化学療法が行われ、その後発熱および下痢を発症した。経過中、腎不全となり、死亡した。このように一連の経過で複数の病態が観察された場合に、報告者が副作用として選択する事象名にはぶれが生じうる。より多くの種類の病態が起きるような場合はさらに事象名の選択は複雑になる。これとは別の問題として、新聞やテレビといったマスメディアでセンセーショナルに取り上げられた副作用については、過去の経験にさかのぼって報告するなどにより急に副作用報告件数が増えることが知られている。また、多くの患者に使用される医薬品は、薬物とは因果関係のない偶発症等の情報を含め、多くの有害事象が報告されてくることになる。

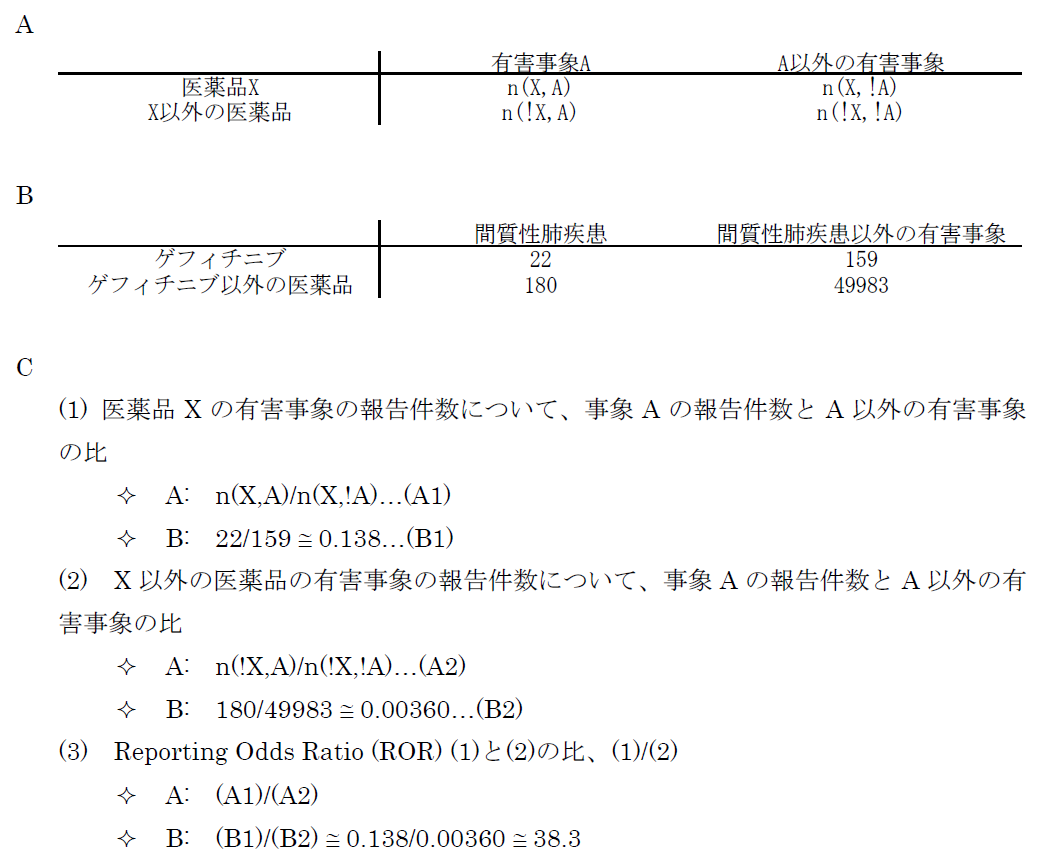

臨床試験では開鍵後に集積検討をすることができるが、自発報告には分母にできる数字や比較対象にできるプラセボ群もない。かといって、集積検討が全くできない訳ではない。世の中で起きている医薬品の副作用について迅速にうかがい知るうえで、現状として最大の情報を蓄積しているのが市販後の自発報告を含む副作用データベースである。前項のような自発報告の欠点があることを把握したうえで、精度は落ちるものの大量のデータから科学的推論をすることは可能であるとされ、医薬品曝露情報(incidence の分母に相当する情報)に代わる何らかの指標を用いる手法がいくつか開発されている。原理のもとにある考え方は単純である。表に示した通り、安全性データベースの中で、興味の対象である医薬品Xについて、興味の対象である有害事象Aが何件報告されているかをn(X,A)と表現する(表 パネルA)。

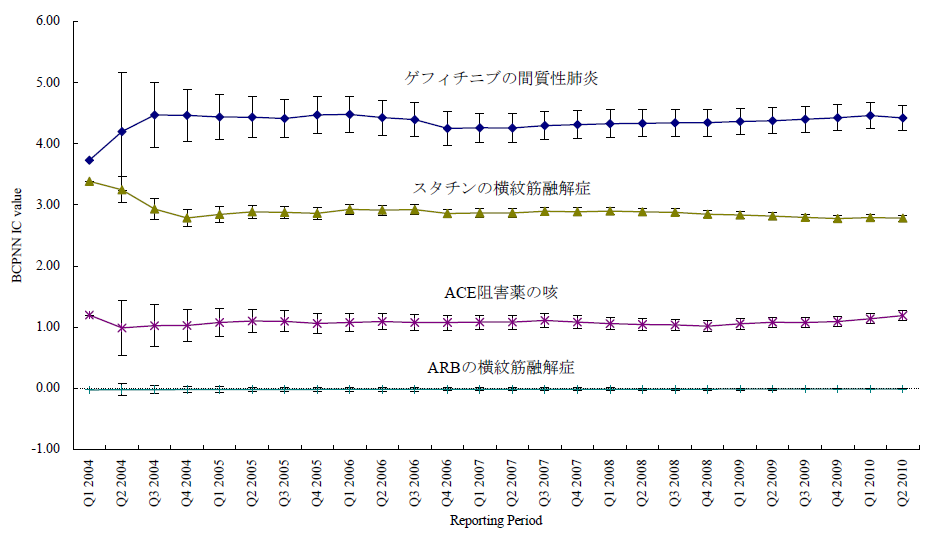

医薬品XについてA以外のすべての有害事象(!Aとする。以下同じ)の件数n(X,!A)とのオッズ比であるn(X,A)/n(X,!A)を計算する。X以外のすべての医薬品についても同様のオッズ比n(!X,A)/n(!X,!A)を計算し、この2つのオッズ比を比較する、すなわち、n(!X,A)/n(!X,!A)に対するn(X,A)/n(X,!A)の比を見ることで、相対的に医薬品Xについて有害事象Aが多く報告されていないかを検出する。これらの数字の比をROR(reporting odds ratio)と呼ぶ。具体的な数字を用いて計算過程を例示するため、2004年の第一四半期の3か月間にFDAに報告された副作用が疑われた症例の報告件数を表のパネルBに示す。この例ではゲフィチニブを興味の対象である医薬品とし、また、興味の対象である副作用を間質性肺炎とした。この3か月間にゲフィチニブが被疑薬として報告された間質性肺炎は22件あり、それ以外の報告事象は159件であった。ゲフィチニブについて、全有害事象報告件数に対する間質性肺炎の報告件数の比は、約0.138であった(パネルC-(1))。これに対して、同じ期間にゲフィチニブ以外の医薬品が被疑薬として報告された間質性肺炎は180件、ゲフィチニブ以外の医薬品が被疑薬として報告された間質性肺炎以外の事象は49983件であった。ゲフィチニブ以外の医薬品について、全有害事象報告件数に対する間質性肺炎の報告件数の比は、約0.00360であった(パネルC-(2))。ゲフィチニブのROR値は0.138と0.00360の比である、約38.3となる。ゲフィチニブについて、それ以外の医薬品と同程度の間質性肺炎が報告されてくると仮定すると期待されるROR値(帰無仮説H0 に基づくROR値)は1.00であるのに対し、計算結果は約38.3であった。閾値をどうするかという議論はあるが、この値は一般的にはシグナルと判断できる程度に大きい値である。ここで、原理を考えていただくためにお示ししたRORは、データベースに入力されている件数が少ない事象や被疑薬としての報告件数が少ない医薬品についての精度が不十分であるとの指摘もある。その精度を上げるべく開発されているものがいくつかある。「はずれ値」を検出するという目的から、neural networkやsupport vector machineといったアルゴリズムを応用することも検討されているが、本稿ではそうしたデータマイニング手法の一つを次の例としてお示しする。それは世界保健機構(World Health Organization, WHO)が開発したBayesian Confidence Propagating Neural Network (BCPNN) という手法であり、BCPNNを用いてFDAが公開しているAdverse Event Reporting System (AERS)データベースのデータを集計し、一般によく知られているいくつかの副作用等を継時的に表現し簡単な解説を加える。